A Taxonomy of Orchestral Grouping Effects Derived from Principles of Auditory Perception

by Stephen McAdams, Meghan Goodchild, and Kit Soden

Copyright © 2022 Society for Music Theory

This item appeared in Music Theory Online in Volume 28, Number 3 on September 28, 2022.

It was authored by Stephen McAdams (contact: stephen.mcadams[at]mcgill.ca), Meghan Goodchild, and Kit Soden, with whose written permission it is reprinted here.

ABSTRACT: Timbre and orchestration remain underexplored in research on symphonic music, and few theories attempt to explain strategies for combining and contrasting instruments or the resulting perception of orchestral structures and textures. An analysis of orchestration treatises and musical scores reveals an implicit understanding of auditory grouping principles, by which many orchestration techniques give rise to predictable perceptual effects. We present a novel theory, formalized in a taxonomy of devices that are related to auditory grouping principles and appear frequently in Western orchestration practice across a range of historical epochs. We develop three classes of orchestration analysis categories: concurrent grouping cues result in blended combinations of instruments; sequential grouping cues result in melodic lines, the integration of surface textures, and the segregation of melodies or stratified (foreground and background) layers based on acoustic (dis)similarities; and segmental grouping cues contrast sequentially presented blocks of material and contribute to the creation of perceptual boundaries. The theory predicts orchestration-based perceptual structuring in music and may be applied to music of any style, culture, or genre.

KEYWORDS: auditory grouping, auditory stream, instrument blends, orchestration, segmentation, segregation, stratification, surface texture, timbral contrast, timbre

Introduction

[1.1] One of the most complex, mysterious, and dazzling aspects of music is the use of timbre in combination with other musical parameters to shape musical structures and to impart expressive, and even emotional, impact through techniques of orchestration. Orchestration, in our broad definition, involves the skillful selection, combination, and juxtaposition of instruments at different pitches and dynamics to achieve a particular sonic goal. This definition could be extended from “instruments” to “sounds” more generally to encompass the voice, as well as recorded and electroacoustic sound sources.

[1.2] Historically, music scholarship has treated orchestration and timbre as secondary.[1] Knowledge of orchestration practice has largely been transmitted through a master-apprentice model, with little systematic research.[2] To derive traces of what might be considered orchestration theory, one must scour treatises on instrumentation and orchestration, which offer general advice based on dependable conventions rather than foundational principles; no clear taxonomies or hierarchical relationships have been established. Ultimately, students of composition and orchestration must study a multitude of scores and listen as widely as possible to orchestral music in order to derive knowledge from many exemplars. As a consequence, expert knowledge of orchestration practice is acquired implicitly through experience: it is more a matter of knowing what to do than of knowing why one is doing it, which is the realm of theory.

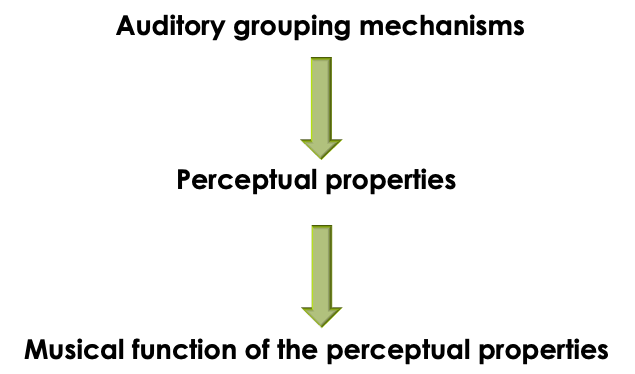

[1.3] In this paper, we examine the role of auditory grouping principles in orchestration practice and explore how they can provide a basis for a perception-based approach to orchestration analysis. We propose a taxonomy of orchestration devices related to auditory grouping principles, with the aim of providing tools that complement traditional music-analytic and music-theoretic approaches, which usually focus on pitch and/or rhythm. This perceptual foundation is meant to help music scholars understand which orchestration techniques can be used to achieve particular perceptual effects based on auditory grouping (expanding work by Beavers 2019, 2021; Bhogal 2020; and Lochhead 2005). A further goal is to explore why certain techniques work perceptually, which acoustic properties determine the strength of the resulting effect, and how these techniques provide a basis for analyzing musical structuring through orchestration. Of course, auditory grouping processes do not tell the whole story of what transpires in orchestration: consider the perceptual and affective qualities of timbre and texture that emerge from such groupings, and the establishment of structural links across time through timbral patterns, which give rise to musical meaning (Beavers 2019). However, auditory grouping creates the basic building blocks of musical structure in the mind of the listener, which then give rise to emergent perceptual qualities that can acquire musical functions and engender meaning within the musical context (Example 1). Perceptual grouping is therefore an appropriate place to start.

Example 1. Auditory grouping gives rise to events from which perceptual properties are extracted, which then acquire musical functions within a given sonic context

[1.4] In the following section, we explore the rarely theorized nature of orchestration practice and consider the notion of structuring parameters in music, focusing on orchestration as a structuring force. We then examine how auditory scene analysis principles can serve as a basis for orchestration theory. Finally, we outline a Taxonomy of Orchestral Grouping Effects (TOGE) related to these auditory grouping principles, drawing on relevant work in music theory and music psychology. The TOGE defines perceptual processes that affect how musical segments are formed on the basis of sonic and contextual properties. It also seeks to provide a more refined vocabulary and set of concepts for describing the structures that result from perceptual grouping processes in music, which necessarily involves introducing new terminology, drawn partly from perceptual psychology and partly from orchestration treatises. We argue that a truly listener-oriented analysis must draw concepts and theoretical tools from psychology.

[1.5] For each category in the TOGE, we present representative musical examples drawn from several epochs to illustrate how the perceptual principles operate to create orchestral effects. Our aim is to develop Albert Bregman’s (1990) auditory scene analysis principles of concurrent and sequential grouping as tools for music analysis, as other music theorists have done (Beavers 2019, 2021; Bhogal 2020; Duane 2013; Iverson 2011). Additionally, we extend the principles to segmental grouping, as in Lerdahl and Jackendoff’s (1983) grouping preference rules. This listener-oriented approach applies the principles by which incoming sounds are perceptually structured into events, textures, streams, layers, and segments. Following the lead of Judith Lochhead’s (2005) three fundamental issues of analysis and adapting them to our own purposes, we develop theoretical concepts, terminology, and corresponding visualizations for the analytic process and apply them as tools for orchestration analysis. Lochhead notes that there are few pre-existing concepts for timbral/textural analysis (and, we would add, for orchestration analysis more generally) and that analysts must theorize concepts that guide analytic observation. We therefore derive terms and concepts from those used to describe auditory grouping principles as they operate in music. We argue that a theorist or analyst who would engage with the role of orchestration in music-as-heard[3] must make the delineation of perceptual grouping terminology and concepts part of the analytic process. The purpose of the terminology is not to create vocabulary for its own sake, but to define a set of terms, grounded in perceptual research, that provides a consistent framework for theorizing the process of perceptual structuring in music. This approach aligns with Dora Hanninen’s (2012) claim that music theory “establishes fundamental concepts and defines terms that generalize across pieces and applications” [3]. Consistent with Lochhead’s and James Tenney’s ([1964] 1988) approaches, we place specific emphasis on a combined aural and symbolic (score-based) analysis, which is necessarily tied to one or more specific recorded performances of a piece.

Timbre as a Structuring Force in Music

[2.1] Orchestration practice has evolved significantly from the Renaissance to today (Goubault 2009; Kreitner et al. 2007; Spitzer and Zaslaw 2004) both in terms of the range of instruments employed and the ways the instruments and instrument combinations are used to create sonic effects. Orchestration goals can be as diverse as creating new timbres through the blending of instrumental sounds, creating a trajectory of increasing instrumental power, contrasting motifs or sections, bouncing antecedent and consequent materials among instrumental groups, integrating instruments into a homogeneous texture or maximally distinguishing the melodic and rhythmic materials they carry through timbral dissimilarities, making a solo voice stand out from a dense texture, and so on. As the composer Jean-Claude Risset remarked, “Orchestration seeks not only to introduce shimmering colors, but also to underscore the organization of the musical discourse” (2004, 145).[4]

[2.2] Although scholarly discourse on the role of timbre in music was relatively scarce until the 1990s, important articles, chapters, and books have appeared more recently (e.g., Barrière 1991; Cogan 1984; Solomos 2013). It is particularly encouraging that recent research in music theory, musicology, and perceptual psychology has shown that timbre has the potential to structure music from local to global scales and to shape the music’s emotional and aesthetic impact.[5] Nevertheless, the musical potential of timbre has received less attention in music theory than other musical parameters such as pitch and duration. Whereas harmony and counterpoint have been extensively developed and explored by theorists and composers, orchestration often seems to have been relegated to the sphere of composers’ individual practice, distinguishing their work from that of others in ways that defy theorizing (Chiasson 2010). Composer and orchestration treatise author Gardner Read remarks: “Every aspect, every facet, of orchestration is a part of creation and so defies the academic approach” (2004, xii). We argue, to the contrary, that perceptual principles provide a foundation for theorizing about orchestration, particularly regarding their role in the creation of blended sonorities and local contrasts, the distinction between musical lines, and the creation of orchestral layers of different prominence.

The Contribution of Auditory Scene Analysis to Orchestration Theory

[3.1] To develop theory in relation to orchestration practice, we first need to find examples of implicit, perception-related principles in orchestration treatises and scores. Such principles are “implicit” in the sense that aspects of orchestration practice are based to some extent on rules and conventions that are followed intuitively without being formalized explicitly. We propose that many aspects of orchestration practice, as revealed through orchestration treatises and scores, are related to auditory grouping principles (Goodchild and McAdams 2021). Auditory grouping processes are the basis of what has been termed auditory scene analysis. According to Albert Bregman, the perceptual psychologist who established this field of research, the “job of perception . . . is to take the sensory input and to derive a useful representation of reality from it” (1990, 3). Our brains evolved to perceive sound sources rather than collections of individual frequency components, and events produced by these sources result in auditory images: mental representations of sound entities that exhibit coherence in acoustic behavior (McAdams 1984). Although sound entities in the everyday world are usually single sound sources, in music they can involve combinations of several sources of sound (musical instruments or electroacoustic sounds). We therefore argue that master orchestrators have learned, and student orchestrators must learn,[6] to harness this tacit understanding in order to create “musical scenes” in which listeners can comprehend complex musical relationships through instrumental scoring. In this way, the TOGE provides a basis for a predictive theory of how grouping principles at work in orchestration lead to perceptual structuring.

[3.2] Example 2 presents the grouping processes of auditory scene analysis and the orchestral effects related to them, as well as the resulting perceptual attributes related to music. There are three main classes of auditory grouping processes: concurrent, sequential, and segmental (Bregman 1990; Goodchild and McAdams 2021; McAdams 1984). First, concurrent grouping governs what components of sounds are grouped together into musical events, which precedes the extraction of perceptual attributes of these events, such as timbre, pitch, loudness, and spatial position. Next, sequential grouping determines whether these events are connected into single or multiple streams, on the basis of which perception of melodic contours and rhythmic patterns occurs. Finally, segmental grouping affects how streams are “chunked” into musical units, such as motives, phrases, and themes. As a result, auditory grouping processes are directly involved in many orchestration practices, including the blending of instrument timbres due to concurrent grouping, the segregation of melodies based on timbral differences in sequential grouping, and call-response or echo-like exchanges through orchestral contrasts in segmental grouping.

Example 2. Auditory grouping processes and the resulting perceptual qualities and corresponding orchestral effects

[3.3] An important principle emerges from auditory scene analysis research: perceived qualities of events or groups of events depend on how things get grouped together (Bregman 1990; see also Example 2). Accordingly, event properties such as pitch, timbre, loudness, duration, and spatial position depend on concurrent grouping. They are computed on the basis of the information that has been grouped together concurrently. Stream properties such as melodic contour and intervals and rhythmic relations depend on sequential grouping. Such relations are computed within streams and are difficult to perceive across streams (McAdams and Bregman 1979). Finally, the formation of musical units, such as motifs, themes, and phrases, depends on their being perceptually segmented, which sets them off as hierarchically higher-level, complex “events.”

[3.4] People make sense of the continuous streams of information they encounter by organizing them into discrete events as an ongoing part of everyday perception (Kurby and Zacks 2008). These everyday perceptual organization processes are naturally also at work in any music-listening situation. In the case of music, the information stream is carried by complex sound waves, created by multiple instrumental sound sources, that arrive at a listener’s two eardrums. Events are organized concurrently at several timescales and are grouped hierarchically. For example, a tone can be grouped with other tones to form a melodic stream, which can be in the foreground layer of a two-layer orchestral texture. In music, therefore, multiple events can overlap in time. Organization is based on detecting changes in the properties of the incoming sensory information. An event is a segment of time that a listener perceives as having a beginning and an end. Beginnings can be signaled by sound emerging from silence, but the end of one event and the beginning of the next can also be signaled by a sudden change in a musical parameter such as pitch, timbre, or dynamics. Slow, continuous changes in these parameters may not provoke event detection and would thus be perceived as modulations of the parameter, such as vibrato, a crescendo, or a continuous change in timbre on a flute as the player adjusts the orientation of the embouchure with respect to the mouthpiece.

[3.5] The writings of several music scholars are relevant to the issues at hand. James Tenney ([1964] 1988) applied concepts from Gestalt psychology to the perceptual organization of musical structure from sound, notably Koffka’s notion of the laws of organization that underlie the formation of cohesive, separable units. This process results in the formation of a grouping hierarchy of segments, which Tenney terms “temporal Gestalt-units.” Tenney and Larry Polansky’s (1980) theory of segmental grouping is based on Gestalt principles: similarity and proximity promote cohesion, whereas difference and temporal distance promote segregation. This conception has strong similarities to the grouping preference rules of Lerdahl and Jackendoff (1983), although some of the principles also apply to sequential grouping and the formation of auditory streams.

[3.6] More recently, the work of Dora Hanninen (2012) has addressed the notions of segmentation and associative organization as applied within music analysis. She proposes three domains of musical experience and discourse, which are sonic (psychoacoustic), contextual (associative), and structural (theory of structure or syntax). Hanninen’s theory is note- or sound-event-based, as is Tenney’s, and thus presumes the formation of events that populate segments. Similarly to Tenney, Hanninen proposes that sonic organization from individual musical segments to larger units is predicated on difference and disjunction. Both theories include the role of contextual factors that are relational, such as repetition, association, and categorization of segments resulting from sonic organization. The approach of the Taxonomy of Orchestral Grouping Effects is oriented toward a score-based exemplification of music-as-heard and focuses primarily on Hanninen’s sonic level, at which sonic criteria respond to psychoacoustic attributes of individual notes and place segment boundaries at local maximum disjunctions in individual dimensions, such as pitch, duration, dynamics, and timbre. The TOGE extends the organization processes to concurrent and sequential grouping, both of which, in our conception, precede the segmental level of grouping, which was the focus of Hanninen and Tenney. Let us examine the three classes of grouping principles as defined in the TOGE more generally before proceeding to detailed examples.

[3.7] Concurrent grouping operates through the auditory fusion of acoustic information into auditory events. Any given sound source has a rich spectrum with mixtures of harmonic or inharmonic frequency components and noise.[7] And yet, under normal circumstances, one usually hears not a plethora of frequencies, but a unified event when that source produces a sound. A number of acoustic cues are used by the auditory system to decide which concurrent components to group together. The most powerful cues include onset synchrony (acoustic components begin synchronously), harmonicity (acoustic components are related by a common period), and parallel changes in amplitude and frequency (McAdams 1984). Deviations from these cues may signal the potential presence of several simultaneously sounding events. This grouping process precedes the extraction of the perceptual attributes of the resulting events, such as timbre, pitch, loudness, duration, and spatial position.
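Of the cues listed above, harmonicity is the easiest to state numerically: components tend to group when their frequencies are approximately integer multiples of a common fundamental, that is, when they share a common period. The following Python sketch tests that condition for a list of component frequencies. The candidate fundamental `f0_hz` and the 3% relative tolerance are illustrative assumptions, not values from this article, and the sketch is not a model of how the auditory system actually estimates periodicity.

```python
def fits_common_period(freqs_hz, f0_hz, tolerance=0.03):
    """Return True if every component frequency lies within a relative
    `tolerance` of some integer multiple (harmonic) of the candidate f0_hz.

    The tolerance value is a hypothetical choice for illustration only.
    """
    for f in freqs_hz:
        n = max(1, round(f / f0_hz))      # nearest harmonic number
        target = n * f0_hz                # exact harmonic frequency
        if abs(f - target) / target > tolerance:
            return False                  # component is mistuned relative to f0_hz
    return True
```

For instance, the lower partials of two tones a perfect fifth apart, on 220 Hz and 330 Hz, all fit a common period of 110 Hz (as do the partials of an equal-tempered fifth, which deviates by only about 0.1%), whereas a component at 345 Hz does not.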

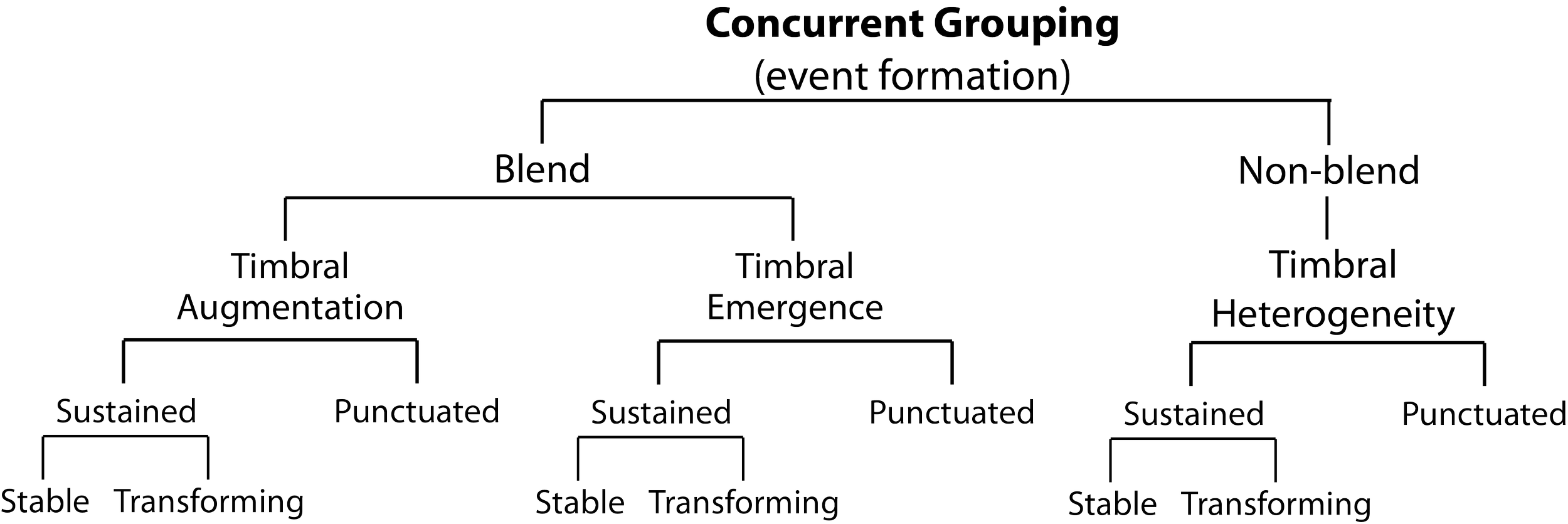

[3.8] The subtypes of the concurrent grouping category in the TOGE are derived from an idea originally proposed by Gregory Sandell (1991, 1995). The default case in music is that different instruments are heard separately. However, if sounds are blended, a new timbre arises, which is either dominated by a given instrument that is augmented by other instruments (timbral augmentation) or is less easily recognized as composed of the constituent sound sources (timbral emergence). On the other hand, if the concurrent events fulfill all of the auditory scene analysis criteria of onset synchrony, harmonicity, and parallelism of pitch and dynamics, as in many instances of instrumental doubling in orchestration, but the instruments are nonetheless heard separately, we classify the effect as timbral heterogeneity. In all three cases, the effect can be of short duration (punctuation) or more continuous; in the latter case, the instruments involved can be stable or can change over time (transforming).[8]

[3.9] Sequential grouping connects events formed by concurrent grouping into auditory streams on the basis of similarity in qualities (pitch, timbre, loudness, spatial position). Sequences of events that are similar in their properties and proximal in time are organized into a single auditory stream, whereas events with different properties are organized into multiple streams, within each of which successive events are more similar (McAdams and Bregman 1979). An auditory stream is therefore a mental representation of a sequence of sounds likely to have arisen from the same sound source. This process provides the basis for perceiving rhythmic patterns and melodic, timbral, and dynamic contours. In sequential grouping, successive events are integrated into a stream or a surface texture. Alternatively, they can be segregated to form multiple streams or more loosely grouped instruments that constitute orchestral strata, which differ in perceptual prominence and thus form foreground and background layers. Again, in each of these cases, we distinguish stable and transforming instrumentation within streams and strata.[9]
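The similarity-and-proximity logic of sequential grouping can be sketched as a greedy procedure that connects each event to the most similar ongoing stream. In this Python sketch, the `Event` fields, the pitch threshold `max_semitones`, the time threshold `max_gap_s`, and the use of an exact instrument label as a stand-in for timbral similarity are all hypothetical simplifications chosen for illustration; the article does not specify numerical criteria, and real stream formation also depends on tempo, loudness, spatial position, and context.

```python
from dataclasses import dataclass


@dataclass
class Event:
    onset_s: float     # onset time in seconds
    pitch_midi: float  # pitch as a MIDI note number (60 = middle C)
    timbre: str        # instrument label, a crude stand-in for timbre


def assign_streams(events, max_semitones=5.0, max_gap_s=0.5):
    """Greedily connect each event to the most similar compatible stream.

    An event may continue a stream only if it shares the timbre label of the
    stream's last event, lies within max_semitones of it in pitch, and follows
    it by more than 0 and at most max_gap_s seconds. Otherwise it starts a new
    stream. Both thresholds are hypothetical values chosen for illustration.
    """
    streams = []  # each stream is a list of Events in time order
    for ev in sorted(events, key=lambda e: e.onset_s):
        best, best_dist = None, float("inf")
        for stream in streams:
            last = stream[-1]
            gap = ev.onset_s - last.onset_s
            dist = abs(ev.pitch_midi - last.pitch_midi)
            compatible = (last.timbre == ev.timbre
                          and dist <= max_semitones
                          and 0 < gap <= max_gap_s)
            if compatible and dist < best_dist:
                best, best_dist = stream, dist
        if best is None:
            streams.append([ev])  # dissimilar from every stream: start a new one
        else:
            best.append(ev)       # continue the closest compatible stream
    return streams
```

With these settings, a flute and an oboe alternating in the same register split into two streams by timbre, a single flute alternating between widely separated pitches splits into two streams by pitch, and a stepwise flute melody stays in one stream.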

[3.10] Segmental grouping involves the hierarchical “chunking” of event sequences into musical units that one usually associates with motifs, cells, and phrases (Deliège 1987), but it can also apply to groups of streams or layers in the creation of section boundaries (Deliège 1989). Segmentation is provoked by sudden changes in a number of musical parameters such as loudness, pitch register, timbre, and surface texture. In orchestration, segmentation into musical units often involves various kinds of timbral contrasts or timbral progressions over longer timespans, from several seconds to many minutes. Through extensive orchestration analysis,[10] we have identified several subcategories of contrasts, including (1) timbral shifts, in which musical patterns are passed from instrument to instrument; (2) timbral echoes of one instrument by another, simulating distance; (3) antiphonal contrasts, with call-and-response patterns between different instrumental groups; (4) timbral juxtapositions that involve contrasts at a local level (and don’t fit within any of the other categories); and (5) larger-scale contrasts signaling section boundaries in concert with changes in other musical parameters such as register, musical texture, and dynamics. It is important to emphasize that we are focusing on perceptual segmentation at the sonic level of Hanninen’s (2012) theory, not on more general types of segmentation at associative or structural levels based on relational properties such as repetition, association of materials, pitch-class set distinctions, and harmonic considerations, to name just a few, nor on higher-level theoretical issues of interpretation and representation of musical meaning.[11]

[3.11] Hanninen’s notion of disjunction underlying segmentation at the sonic level involves salient difference, edge detection, object recognition, and boundary formation. The auditory dimensions she considers as note attributes, whose disjunction can contribute to segmentation, include pitch, attack-point, duration, dynamics, timbre, and articulation. Her conception seems to presuppose concurrent and sequential grouping and focuses on segmental grouping. In her words, “within each sonic dimension, edge detection and stream segregation support object recognition for successive, and simultaneous, events, respectively” (23–24).[12]
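Placing boundaries at local maxima of disjunction within a single dimension, in the spirit of Hanninen’s sonic level and of Tenney and Polansky’s Gestalt-based approach, can be illustrated compactly. This Python sketch measures the absolute difference between successive values (for example, pitches as MIDI note numbers) and marks a boundary wherever a difference is strictly larger than both of its neighbors. It is a simplified single-dimension rule assumed for illustration, not a reimplementation of either theory, both of which also weigh several dimensions and further factors.

```python
def boundary_indices(values):
    """Return indices i such that a segment boundary falls before values[i].

    Successive disjunctions |values[i] - values[i-1]| are computed, and a
    boundary is placed where a disjunction is a strict local maximum, that
    is, larger than the disjunctions immediately before and after it.
    Missing neighbors at either end are treated as negative infinity.
    """
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    boundaries = []
    for k, d in enumerate(diffs):
        left = diffs[k - 1] if k > 0 else float("-inf")
        right = diffs[k + 1] if k + 1 < len(diffs) else float("-inf")
        if d > left and d > right:
            boundaries.append(k + 1)  # boundary between values[k] and values[k+1]
    return boundaries
```

For the pitch sequence 60, 62, 64, 72, 74, the leap of eight semitones is the only local maximum, so a single boundary falls before the fourth note (index 3). The same function can be applied separately to dynamics, durations, or a numerical timbre descriptor.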

[3.12] Let us now examine in more detail the nature of these different classes of grouping processes and how they relate to orchestration. We will do so through the taxonomy representing subcategories of the three main types of auditory grouping principles described above. The taxonomy is organized from the smallest, local-level units (event formation from perceptual fusion or blending) through the connection of events into auditory streams, surface textures and layers, and finally to segmentation of smaller- and larger-scale units through orchestral contrasts.

Taxonomy of Orchestral Grouping Effects (TOGE)

Concurrent Grouping

[4.1] In the case of orchestration, we are interested in cases in which two or more concurrently sounding instruments are fused perceptually, which is usually referred to in orchestration treatises as “blend” in English, “fondu” or “mélange” in French, and “Verschmelzung” in German (e.g., Adler [1982] 2002; Koechlin 1954–1959, vol. III; Schneider 1997, respectively). The auditory grouping effect of blend arises from the basic technique of combining instruments, referred to as the “doubling” or “coupling” of instruments at the unison or at particular pitch intervals (drawing on principles of harmonicity and parallel changes of pitch). Doubling and coupling also usually imply rhythmic synchrony. However, as we will see, not all couplings result in blending of the component instruments.[13] The fusion process creates the illusion that the composite sound originates from a single source, creating “virtual source images” (McAdams 1984), “phantasmagoric instruments” (Boulez 1987), or “chimeric percepts” (Bregman 1990). And the perceived timbre depends on which acoustic components have been grouped together concurrently. Therefore, in orchestration practice, blending is often used to create new timbres.

[4.2] The most effective concurrent grouping principles include onset synchrony, harmonicity, and parallel changes in dynamics and pitch. These principles are part of everyday perceptual processing (the aim of which is to understand what is happening in the world) and are likely to be relatively independent of musical style or culture. Consonant intervals (unisons, octaves, fifths), which correspond approximately to frequency ratios found between lower partials in the harmonic series, are more likely to fuse than are imperfect consonances and dissonant intervals (Lembke and McAdams 2015). Sound components that start within a synchrony window of about 30–50 milliseconds are more likely to fuse together. For example, because timbre depends on which frequency components get grouped together, an asynchrony of more than 30 milliseconds between sound components can affect timbre judgments (Bregman and Pinker 1978). Parallel changes in sound level (dynamics) can cause components in different frequency regions to fuse together (Hall, Grose, and Mendoza 1995); similarly, changes in frequency that maintain harmonic ratios, such as vibrato, cause components to fuse together and to separate from components that are changing in different directions (McAdams 1989). These perceptual results are based primarily on coherent changes within single events (i.e., within individual notes rather than across the successive notes of a melodic line). However, when harmonicity and onset synchrony are maintained in sequences of events that change in parallel in pitch and dynamics, as in many passages of Maurice Ravel’s Boléro (1928), the fusion effect is strengthened, demonstrating an influence of coherent change over time on concurrent grouping, as noted by Bhogal (2020). When Ravel introduces multiple sources playing the main melody, they are at pitches corresponding to the harmonic series, which is maintained strictly as the melody is played,[14] and they start and stop at the same time over the whole sequence. In other pieces, this strict parallelism of pitch intervals is often relaxed to similar motion so that the instruments stay in the same key and respect the harmonic progression. If the motion becomes oblique or contrary, the tendency is, of course, toward perceptual separation of the parts (see Huron 2016, chap. 6).
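The onset-synchrony criterion can be illustrated by clustering note onsets that fall within a synchrony window. The Python sketch below uses 30 ms, the lower end of the 30–50 ms range given above, as its default; measuring each window from the first onset of a cluster is an assumption made for simplicity. The sketch addresses onset timing only and ignores harmonicity, parallel change, and timbral similarity, all of which also affect whether the grouped components actually fuse.

```python
def group_by_onset(onsets_ms, window_ms=30.0):
    """Cluster onset times (in milliseconds) into candidate concurrent groups.

    An onset joins the current cluster if it falls within window_ms of that
    cluster's first onset; otherwise it starts a new cluster. The anchoring
    on the first onset is a simplifying assumption.
    """
    clusters = []
    for t in sorted(onsets_ms):
        if clusters and t - clusters[-1][0] <= window_ms:
            clusters[-1].append(t)  # close enough to count as synchronous
        else:
            clusters.append([t])    # too late: a new candidate event
    return clusters
```

Onsets at 0, 12, and 25 ms form one candidate group, and onsets at 80 and 95 ms form another; widening the window to 50 ms merges two onsets 45 ms apart that the 30 ms window keeps separate.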

[4.3] These concurrent grouping principles are all important to achieve a musical blend, and the more they all converge, the stronger the degree of fusion (Bregman 1990; McAdams 1984; McAdams and Bregman 1979). However, blend is not an all-or-none phenomenon. One can have various degrees or strengths of blending along a continuum from completely fused to completely segregated. The opposite of blend is concurrent segregation, where simultaneously sounding notes are heard independently. Deviations from onset synchrony, harmonicity, and parallel motion can inhibit fusion. However, even when these principles are satisfied, they do not suffice in and of themselves. Timbral similarity is also crucial in terms of both spectral shape and temporal envelope shape.[15]

[4.4] As shown in Example 3, concurrent grouping principles in the TOGE affect whether a combination results in blend or non-blend. We further specify two types of blend in terms of the timbral result: augmentation and emergence. Timbral heterogeneity is the result of non-blend when the pitch and rhythmic parameters are strongly coupled across instruments, but the timbral characteristics do not result in perceptual fusion. For each of these categories, the phenomenon is either sustained over time (a musical phrase) or is a very short punctuation (a chord or accent, usually a quarter note or shorter in duration). For sustained cases, the instrumentation is either stable over a sequence of blended or non-blended events or evolves over time, which we refer to as transforming. In the following sections, we provide more detailed accounts and examples of augmentation, emergence, and heterogeneity.
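The decision structure of the concurrent grouping categories can be summarized as a small classification function. In this Python sketch, the perceptual judgments (whether the instruments blend, whether a dominant instrument remains identifiable, whether pitch and rhythm are strongly coupled, whether the event is a short punctuation, and whether the instrumentation changes) are supplied by the analyst; the function only encodes how the TOGE labels follow from those judgments. The function and parameter names are hypothetical.

```python
def classify_concurrent(blended: bool, dominant_identifiable: bool,
                        coupled: bool, punctuated: bool,
                        instrumentation_changes: bool) -> str:
    """Map an analyst's perceptual judgments onto TOGE concurrent labels."""
    if blended:
        # Blend: a recognizable dominant instrument means augmentation;
        # otherwise the fused sound is heard as a new, emergent timbre.
        effect = ("timbral augmentation" if dominant_identifiable
                  else "timbral emergence")
    elif coupled:
        # Non-blend even though pitch and rhythm are strongly coupled.
        effect = "timbral heterogeneity"
    else:
        # Default case: instruments heard separately without strong coupling,
        # so none of the three concurrent categories applies.
        return "concurrent segregation"
    if punctuated:
        return f"{effect} (punctuated)"
    span = "transforming" if instrumentation_changes else "stable"
    return f"{effect} (sustained, {span})"
```

Read this way, the English horn doubled by two celli in La Mer corresponds to `classify_concurrent(blended=True, dominant_identifiable=True, coupled=True, punctuated=False, instrumentation_changes=False)`, which yields "timbral augmentation (sustained, stable)".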

Blend

Timbral Augmentation

[4.5] Timbral augmentation involves fusion in which one dominant instrument is embellished or colored by a subordinate instrument or group of instruments.

[4.6] Timbral augmentation (sustained, stable). A striking example involves the English horn doubled at the unison by two solo celli in the first movement of Debussy’s La Mer (1903–5) (Example 4). The English horn timbre (dark red box) dominates but is given texture and weight by the embellishing celli (lighter pink box). Audio Example 1 presents the English horn alone (1a), then with the two celli (1b), and finally the full context (1c).[16] Note the change in timbre with the addition of the celli, but also that the degree of fusion of the English horn and celli in the foreground is enhanced when the background strings are added. This change in the full context indicates that the blending of specific instruments may be affected by what else is occurring in the music. In this example, the instrumentation of the blend remains fixed, and we refer to this as stable timbral augmentation.

Example 4. Timbral augmentation (sustained, stable): Debussy, La Mer, i, mm. 122–31

[4.7] Timbral augmentation (sustained, transforming). This device involves situations in which the dominant instrument(s) remain(s) fixed while the embellishing parts change as instruments are added or removed over the course of a phrase. In this case, the timbre of the dominant instrument is continuously modified over time by the changes in embellishing instruments. A powerful example of this phenomenon, noted by Gregory Sandell (1991, 63), is shown in Example 5. The opening of the Overture to Wagner’s Parsifal (1882) contains a passage in which the dominant first and second violins (dark red box) are augmented throughout by the celli (lowest lighter pink box) at the unison (high instrumental register), an orchestration technique that adds tension, particularly during the crescendo. This combination is then progressively augmented by the addition of clarinet, English horn, and then oboe (upper lighter pink box), still in unison doubling, thickening and enriching the sound with reed instruments up to the middle of the passage. Thereafter, the woodwinds are removed in reverse order, accompanying the decrescendo. The timbral waxing and waning follows the overall melodic contour (visible in the upper pink box). This exemplifies an important musical effect that can be achieved only through orchestration. Note that the dominant instrument (the violins) remains constant; it is the combination of embellishing instruments that changes over time. Audio Example 2 first presents the violins alone (2a) and then the full context (2b).

Example 5. Timbral augmentation (sustained, transforming): Wagner, Parsifal, Overture, mm. 20–25

[4.8] Timbral augmentation (punctuated). A punctuated blend is a short event, usually played tutti, that is too brief for a listener to parse its constituent elements. Example 6 presents a punctuated timbral augmentation from the third movement of Sibelius’s Symphony no. 5 (1915, revised 1916–19) in which the brass family dominates and is augmented by strings and woodwinds in a series of punctuated chords (Audio Example 3). Note that in this performance the timpani stand out as timbrally heterogeneous in the last two chords due to onset asynchrony.

Example 6. Timbral augmentation (punctuated): Sibelius, Symphony no. 5, op. 82, iii, mm. 474–482

Timbral Emergence

[4.9] Timbral emergence occurs when the fusion of different instruments results in the synthesis of a new timbre that is identified as none of its constituent instruments, creating a new sonority (Sandell 1995). Kendall and Carterette (1993) have shown with dyads of instruments that the stronger the perception of blend is, the more difficult it is to identify the constituent instruments, as they are no longer accessible as individual sounds from which the properties that allow identification of the instrument can be extracted. Another principle that affects perceptual fusion in timbral emergence is related to the fact that dense frequency spectra, such as those created by tight pitch clusters, are not analyzable perceptually because too many frequency components from different sources fall in the same auditory channels (McKay 1984; Noble 2018). Spectral density has been employed a great deal in sound mass music, such as György Ligeti’s Atmosphères (1961) or Krzysztof Penderecki’s Threnody to the Victims of Hiroshima (1960).

[4.10] Timbral emergence (sustained, stable). In Example 7, the English horn is doubled at the octave by a muted trumpet (red boxes) in a passage from the first movement of Debussy’s La Mer (1903–5). The blend of the strident trumpet and rich English horn has an emergent quality similar to a reed organ, and indeed with this instrumental combination Debussy may have been trying to replicate the foghorn of a ship at sea. Audio Example 4 presents the English horn and trumpet combination by itself and then the full context.

Example 7. Timbral emergence (sustained, stable): Debussy, La Mer, i, mm. 6–17

[4.11] Timbral emergence (sustained, transforming). In Example 8, the opening measures of the third movement of Schoenberg’s Five Pieces for Orchestra, op. 16 (1949), entitled “Farben” (German for “colors”), involve an oscillating timbral “modulation” that elides smoothly between different groups of blended instruments on the same five-voice chord (Audio Example 5). The timbral modulation occurs at a faster rate than the seven chord changes over these eleven measures. The first four voices alternate every half note between flute 1, flute 2, clarinet, and bassoon on the one hand and English horn, muted trumpet, bassoon, and muted horn on the other (red boxes), and the fifth voice alternates every quarter note between viola and contrabass. As Charles Burkhart notes,

The changing-chord organism, then, reveals a very tight and solid pitch construction, but one that is so simple and devoid of pitch embellishment that, by itself, it could not sustain interest. The dimension of the work that holds us from moment to moment is, of course, color. In traditional orchestral music, instrument changes are generally much slower than changes of pitch [. . .]. In Farben we have the reverse: the changes of instrument (therefore of color) are generally faster than the changes of pitch. (1973, 151)

In a footnote on the score,[17] Schoenberg specifies that the change between chords should be done so gently that there is no accentuation of entering instruments; the change should only become noticeable by the different emerging color, essentially defining the notion of continuous timbral modulation. This kind of timbrally modulated harmonic sonority as an extended auditory event became quite common in the spectral music of composers such as Gérard Grisey (Partiels, 1975) and Tristan Murail (Gondwana, 1980).

Example 8. Timbral emergence (sustained, transforming): Schoenberg, Five Pieces for Orchestra op. 16, iii, mm. 1–11

[4.12] Timbral emergence (punctuated). Example 9 shows a single tutti chord giving rise to timbral emergence in the fourth movement (Marche au supplice [March to the scaffold]) of Berlioz’s Symphonie fantastique (1830) (Audio Example 6). Due to the short duration, there is not enough time to aurally analyze the instrumental constituents of this final tutti chord, which symbolizes the fatal blow of the guillotine that brutally interrupts the idée fixe representing a final thought of love. The classification of Examples 6 and 9 as augmented and emergent, respectively, depends on the synchrony and balance among instruments: synchrony strongly affects blend and balance can affect whether certain instruments dominate. The analyst must decide upon listening whether some instrument or family dominates and is identifiable or not.

Example 9. Timbral emergence (punctuated): Berlioz, Symphonie fantastique, iv, m. 17

No-blend

Timbral Heterogeneity

[4.13] Timbral heterogeneity occurs when the parts written for instruments satisfy auditory grouping principles (i.e., playing synchronously, in harmonic relations, and with parallel motion), but do not blend completely and some instruments or groups of instruments are consequently heard independently due to their timbral dissimilarity (e.g., differences in attack quality, formant structure, and brightness/darkness). The analyst must decide if they can hear multiple concurrent sonorities. In some cases, one or more instruments may stand out while others are blended. In other cases, a listener might distinguish separate groups of blended instruments. As with all grouping effects, blend is not all-or-none and analysis should include an estimate of blend strength, which can depend on performance nuances such as timing, tuning, and balance.

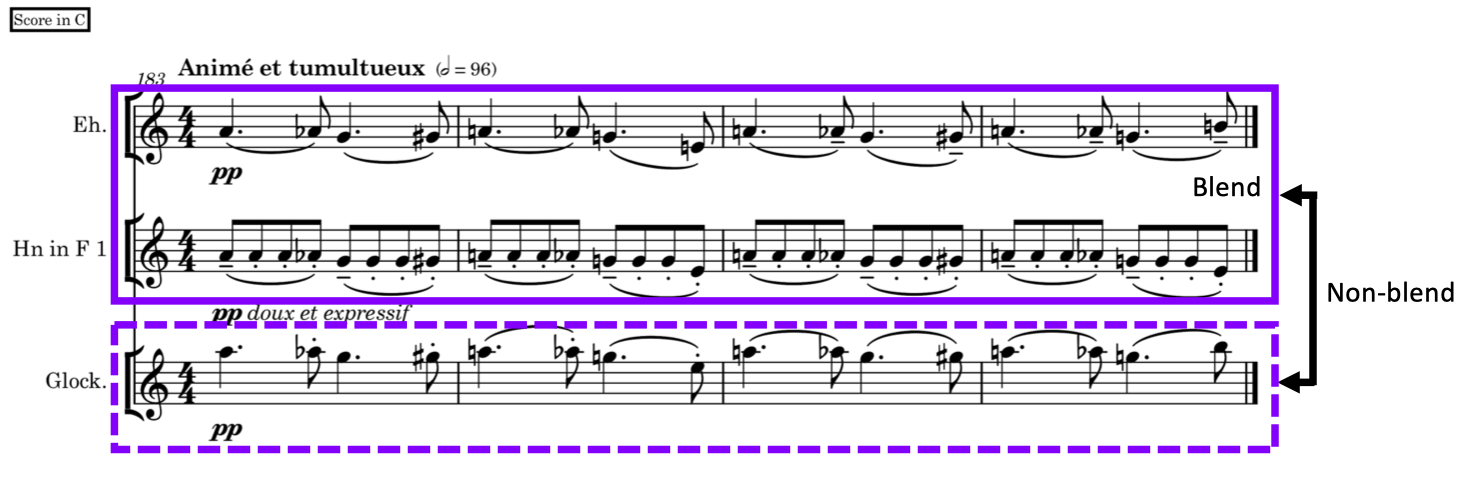

[4.14] Timbral heterogeneity (sustained, stable). A classic example of this effect is the doubling of an instrument with the glockenspiel two or three octaves above. As Forsyth notes concerning the glockenspiel, “Its main use is to ‘brighten the edges’ of a figure or fragment of melody in conjunction with the upper octaves of the orchestra. Strictly speaking, one may say that it combines with nothing. And this is its virtue” (1935, 62, emphasis added). As shown in Example 10, drawn from the third movement of Debussy’s La Mer (1903–5), the glockenspiel doubles the unison English horn and horn line three octaves higher. Here, the repeating eighth notes in the horn blend with and emphasize those of the more sustained English horn (solid purple box) until they split on the last eighth note at different pitches. The glockenspiel does “brighten the edges” of the blend between English horn and horn. However, it is indeed heard separately (as indicated by the dashed purple box) due to: (1) the different temporal envelopes of sustained and impulsive sounds; (2) its very high spectral content that does not overlap with the other instruments; and (3) its inharmonicity compared to the harmonicity of English horn and horn. Audio Example 7 presents first the English horn alone (a), then adds the horn (b), then the glockenspiel (c), and then the rest of the orchestra in sequence (d).

Example 10. Timbral heterogeneity (sustained, stable): Debussy, La Mer, iii, mm. 183–186

[4.15] Timbral heterogeneity (punctuated). Example 11 is from the last two bars of the first movement of Tchaikovsky's Nutcracker Suite op. 71a (1892). In the two final tutti chords in Audio Example 8, the woodwinds and horns (solid purple box) are segregated from the triangle and pizzicato violins and violas (dashed purple box), even though they sound synchronously with each other. Each group is blended, but the inharmonicity of the triangle and the more quickly damped impulsive amplitude envelope of the triangle and pizzicato strings set them apart from the wind instruments.

Example 11. Timbral heterogeneity (punctuated): Tchaikovsky, Nutcracker Suite, op. 71a, i, mm. 181–182

Sequential Grouping

[5.1] Sequential grouping concerns how successive events or temporally overlapping groups of successive events are perceptually connected to one another. The formation of auditory streams, surface textures, or orchestral strata is traditionally understood in orchestration practice as either contrapuntal writing (the creation of co-equal parts) or orchestral layering (the creation of hierarchical parts, i.e., foreground and background layers), and everything in between. The basic principle of auditory stream formation is that events that are temporally proximal and similar in their auditory qualities, such as pitch, loudness, timbre, and spatial position, will tend to be grouped sequentially (see Bregman 1990, chap. 2; Huron 2016, chap. 6; McAdams and Bregman 1979; Moore and Gockel 2002, for reviews). It is important to note that timbre covaries with pitch, playing effort, and articulation in musical instruments and so cannot be considered independently: changing the pitch or the musical dynamic also changes the timbre (McAdams and Goodchild 2017a).

[5.2] Discontinuities created by sudden changes in these auditory qualities cause successive events to be perceptually disconnected. However, the auditory system can connect non-successive events that have similar qualities. Therefore, if a violinist alternates rapidly between pitch registers on a note-to-note basis, successive notes are separated, but are connected to notes of similar register, thereby forming two separate or segregated streams in counterpoint with each other. This technique of pseudo-polyphony or compound melody was used extensively starting in the Baroque era by composers such as Georg Philipp Telemann and Antonio Lucio Vivaldi. Note that the notions of parts or lines, as in contrapuntal part-writing, may or may not result in a single stream per part. Moreover, melodies and rhythms (the succession of pitch intervals and interonset intervals) belong to, or are computed within, auditory streams, not across them (Dannenbring and Bregman 1976). Melody and rhythm perception depend on how things are grouped sequentially: both are properties of streams and can be affected by timbre-based grouping.

[5.3] Contrasts in the timbral properties of different instruments, particularly those related to sound color derived from the shapes of the sounds’ frequency spectra, can also cause stream segregation. Prout refers to this as “the contrast of instruments employed simultaneously” (Prout 1899, 115), as opposed to successive contrasts of instrumentation/orchestration. The degree of segregation between parts can be predicted by their perceived timbral difference, including differences in the shape of the frequency spectrum and temporal fluctuations in the amplitude envelope (Bey and McAdams 2003; Iverson 1995; Reuter 1997). Interestingly, timbre is a more important cue for stream segregation for nonmusicians than for musicians (Marozeau, Innes-Brown, and Blamey 2013). Jeffrey DeThorne’s (2014) notions of “colorful plasticity” and “equalizing transparency,” while never defined in acoustic terms, attempt to theorize the concept of “orchestral clarity,” that is, some degree of segregation that allows one to hear the individual parts clearly. Similarly, concerning the formation of orchestral layers, Charles Koechlin (1954–1959) introduces the concepts of volume (auditory size or extensity) and intensité (intensity, not necessarily merely related to loudness, but perhaps also to a kind of inherent “force” in a sound) (Chiasson 2010; Chiasson and Traube 2007). These properties vary as a function of the instrument, as well as the register and dynamic at which it is playing. Instruments that are similar in intensity and extensity are considered to be équilibrés (balanced) and can be grouped into orchestral layers, whereas instruments presenting differences in these properties are more likely to be in separate layers.

[5.4] One rule of counterpoint is to avoid crossing parts in pitch, because listeners tend to group notes on the basis of pitch proximity. However, this problem can be overcome if the two parts, such as a duo of clarinet and violin, have sufficiently different timbres (Gregory 1994; Tougas and Bregman 1985). But segregation can also be achieved with sounds from a single instrument if they have very different timbral characteristics, as one might find in solo violin pieces by Niccolò Paganini in which bowed and plucked sounds form separate streams in counterpoint with each other. In the words of Charles Koechlin: “A melody interleaved with an accompaniment of the same timbre will be heard easily only if there is a gap between this melody and the parts that accompany it. On the other hand, with different timbres, this gap is not necessary” (Koechlin 1954–1959, 22).[18] Note that auditory stream formation theory posits that the events cohering into a stream can be detected and followed over time, and in the cases mentioned here, it is the timbral similarity that allows this tracking over time. This has been termed the “timbral differentiation principle” in part writing by David Huron (2016, 114–6).

[5.5] Sequential grouping results in the formation of one or more auditory streams, as well as looser integration of musical parts into complex textures or orchestral layers. As shown in Example 12, in the TOGE we distinguish between the sequential integration into streams or surface textures and the segregation of temporally overlapping musical events into co-equal streams or stratified orchestral layers. Again, the instrumentation is either stable or transforming over the passage in question. The following sections provide more detailed accounts and examples of these phenomena.

Integration

[5.6] We distinguish two types of sequential grouping integration: stream integration and integration of a surface texture. In stream integration, consistent timbre, register, and dynamics across a sequence of notes help them to be connected perceptually into an auditory stream. Integration into a single stream also holds for groups of instruments playing synchronously and in parallel at harmonic intervals (i.e., homophonically) if they are perceptually fused into a virtual source image, as in most blends that are sustained over a succession of pitches. The classic example of this is Maurice Ravel’s Boléro, mentioned above, in which a complex sonority is built by assigning different instruments to pitches at harmonic intervals. The integration of a surface texture occurs when two or more instruments have different material (contrasting rhythmic figures and/or pitch material) but are integrated perceptually into a single surface texture; the term “texture” is used here more in its meaning of the consistency of a surface than an aggregation of layers. It is perceived as being more than a single instrument, but the instruments do not clearly separate into distinguishable and trackable streams. As a result, this is a looser perceptual grouping than stream integration.

Stream Integration

[5.7] Stream integration (stable). Example 13 is from Ravel’s (1922) orchestration of Mussorgsky’s Pictures at an Exhibition, vi (1874). The musical stream in Audio Example 9 is formed on the basis of small changes in pitch, consistent dynamics, and a similar blended timbre across the whole passage. The consistent timbral blend creates the perception of a sustained musical stream. Although the synchrony, harmonicity, and pitch parallelism create the timbral blend at each note, with woodwinds augmenting the string timbre, it is the continuity of pitch, timbre, and dynamics from note to note that ensures the stream integration.

Example 13. Stream integration (stable). Ravel’s orchestration of Mussorgsky’s Pictures at an Exhibition, vi (“Samuel Goldenberg and Schmuÿle”), mm. 1–8

[5.8] Stream integration (transforming). Example 14 is drawn from a contemporary piece by Roger Reynolds, The Angel of Death for solo piano, ensemble, and computer-generated sounds (1998–2001). All of the large-scale thematic materials of the piece were composed for both solo piano and a 16-instrument ensemble. In the case of the fifth theme, only the ensemble version appears in full; the initial 10 measures are shown in Example 14. Touizrar and McAdams (2019) note that the aim of the ensemble version was to introduce additional perspective with respect to the piano version by introducing changes in timbre made possible by the instruments and their combinations. The melodic progression is highlighted in Example 14 with colored boxes. However, instead of passing from instrument to instrument, the passage has many overlapping instruments, so the melodic sequence is of continuously varying blended timbres. The vertical lines indicate blends and are examples of short passages of transforming timbral augmentation and emergence. The passage moves from violins and vibraphone, joined by clarinet, to marimba joined successively by viola then cello, to clarinet and xylophone, to xylophone and flute, and so on. It is the registral and gestural continuity, as well as the relative timbral proximity, that integrates the successive parts into a musical stream. Audio Example 10 presents first the piano version (a) and then the ensemble version (b) in order to appreciate the additional timbral sculpting that occurs.

Example 14. Stream integration (transforming): Reynolds, The Angel of Death, S section, mm. 364–373

Integration of Surface Textures

[5.9] Surface texture (stable). Example 15 is drawn from Bedřich Smetana’s symphonic poem Die Moldau (1880). In mm. 185–186, the flutes play an oscillating pattern in sixteenth notes that is initially perceived as a stable stream integration, but they are joined in m. 187 by the clarinets, which weave through this pattern in triplet eighth notes creating a surface texture. This texture is meant to represent symbolically the surface of the river Moldau shimmering in the moonlight. Audio Example 11 presents this texture alone (a) and then in the full context with horns, harp, and strings (b). Note that when this texture is heard on its own, it is easier for the listener to pick out pieces of individual streams played by the flutes and clarinets, whereas the streams integrate into a surface texture in the full context as a middleground layer, which will be described in the following section.

Example 15. Surface texture (stable): Smetana, Die Moldau, mm. 185–194

[5.10] An example of surface texture (transforming) can be found in the Overture to Smetana’s The Bartered Bride (1866). In Example 16, there is rhythmic synchrony in rapid eighth notes with distinct pitch contours in the different string parts, which are progressively joined by clarinets, oboes, and then flutes. In spite of (or perhaps because of) the rhythmic synchrony, it is again difficult to follow any given instrument, and a complex surface texture arises that evolves in tone color (Audio Example 12).

Example 16. Surface texture (transforming): Smetana, The Bartered Bride, Overture, mm. 89–99

Segregation

[5.11] We distinguish two main types of sequential grouping segregation in orchestral music: the perceptual separation of individual “voices” of equal prominence (stream segregation) and groupings of instrumental parts into orchestral layers that are different in prominence (stratification).

Stream Segregation

[5.12] We define stream segregation as involving two or more clearly distinguishable voices (i.e., integrated streams) with nearly equivalent prominence or salience. The different instrument parts must be co-equal and scored as independent, often contrapuntal, melodic lines with rhythmic independence. Normally segregation occurs with individual instruments, although strongly fused instrument pairings or groupings can also form an individual stream, i.e., a coupling of two or more different instruments can blend into a “virtual voice” and form a single stream that is then segregated from some other instrument or blended group of instruments in a different stream.[19] As previously, we distinguish stable and transforming stream segregation situations.

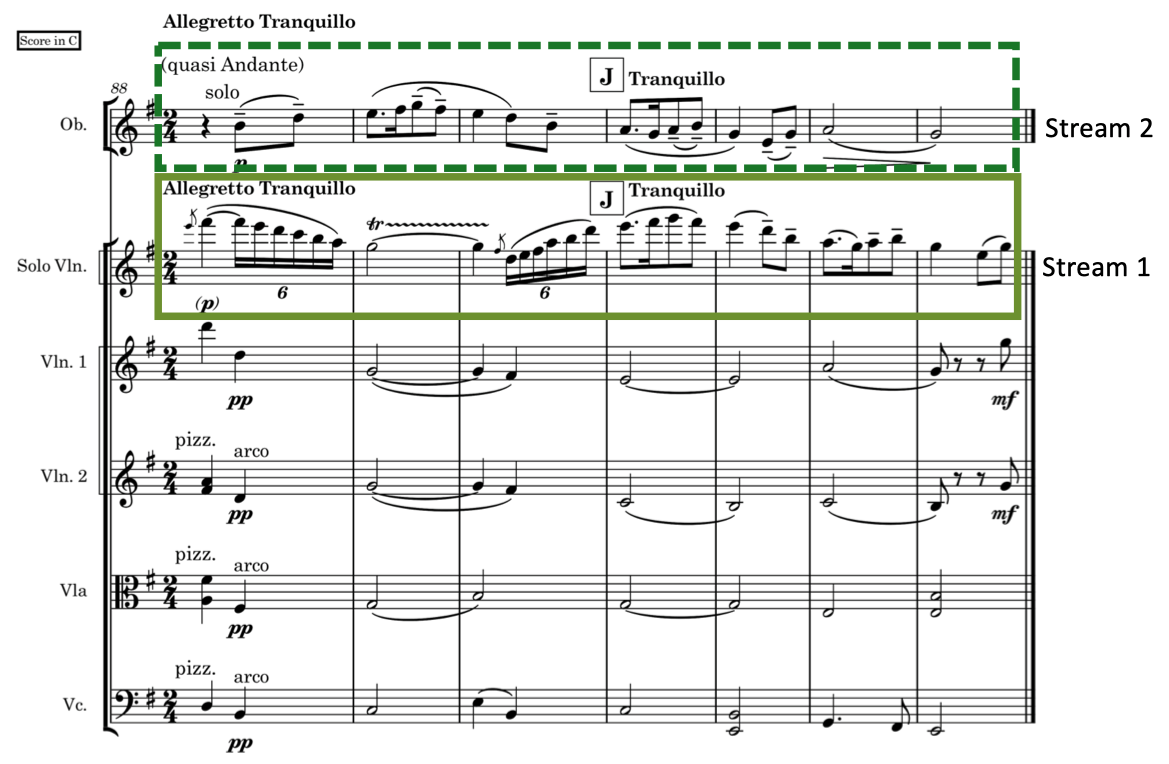

[5.13] An instance of stable stream segregation of two instruments is found in Ralph Vaughan Williams’s The Lark Ascending (1920) (Example 17). Although the violin is the soloist (solid olive-green box), Vaughan Williams adds a complementary solo passage for the oboe (dashed green box), and so the oboe and solo violin now have equal prominence in two-voice counterpoint (which also occupies a foreground position with the string sections forming a background; see Stratification of Orchestral Layers below). The registral and rhythmic differences are highlighted by a timbral difference between the delicate crystalline clarity of the violin and the plaintive nasality of the oboe, which enhances the registral separation and rhythmic contrast to ensure their distinguishability. Although these two parts would most likely segregate if played by the same instrument, note first that timbre covaries with pitch register and second that the additional timbral difference likely enhances the segregation, as Fischer et al. (2021) have demonstrated experimentally. Audio Example 13 first presents the two instruments alone (a), followed by the full context with the background harmony provided by the string sections (b).

Example 17. Stream segregation (stable) of co-equal single-instrument lines: Vaughan Williams, The Lark Ascending, mm. 88–94

[5.14] Example 18, taken from Alexander Borodin’s In the Steppes of Central Asia (1880), gives another example of stable stream segregation, but with multi-instrument fused streams. One stream is formed of flutes and violins each in octave doubling (solid olive-green boxes). The other stream comprises two bassoons and horns 1 and 3 in unison (dashed green box). In the background (see Stratification of Orchestral Layers, below) are sustained harmonies in the other woodwinds and horns 2 and 4, punctuated by pizzicati in the other strings (Audio Example 14). Note the timbral affiliation within each stream of the higher-register (flutes, violins) and lower-register (bassoons, horns) instruments. Interestingly, both streams result from the blending of instruments from different families. These blend pairings occur often in the orchestral repertoire and are most likely due to strong overall spectral similarity of the two instruments. Again, the registral and rhythmic differences are enhanced by the timbral differentiation.

Example 18. Stream segregation (stable) of blended streams: Borodin, In the Steppes of Central Asia, mm. 210–218

[5.15] Stream segregation (transforming) is represented in Example 19 by a passage from the fourth movement (Madrid) of Stravinsky's Quatre Etudes (1952). In Audio Example 15, one stable stream is formed by woodwinds (solid olive-green box), whereas the brass stream transforms in passing from three horns to one horn and four trumpets (dashed green boxes). The pitch ranges of the two composites are partially overlapping, and there is significant rhythmic synchrony, but the different emerging timbral qualities and pitch contours contribute to their segregation.

Example 19. Stream segregation (transforming) of blended streams: Stravinsky, Quatre Etudes, iv (Madrid), mm. 93–94

Stratification of Orchestral Layers

[5.16] In denser orchestral textures, musical perspective is sometimes achieved by the organization of materials into layers of differing perceptual prominence.[20] Stratification occurs when two or more different layers of musical material are separated into more and less prominent strands. Most often one hears foreground and background, but at times a middleground is also present.[21] Stratified layers often have more than one instrument in at least one of the layers.

[5.17] The main perceptual issues in orchestral stratification concern the musical parameters that determine: (1) which parts are grouped together; (2) the degree of separation between groups; and (3) the relative perceptual salience of a layer that leads a listener to hear it as foreground, background, or middleground. Many different musical parameters can be used to group instrumental parts into layers and to distinguish among the different layers. These parameters include register, dynamics, articulation, rhythmic texture, and, of course, choice of instruments. Timbral similarity and difference can act as cohesive and dividing forces, respectively. And remember that the timbre of sounds produced by a given instrument covaries with pitch, dynamics, and articulation. Timbre is thus necessarily involved even when it seems that these other parameters are primarily responsible for the grouping.

[5.18] Koechlin’s notion of equilibrium or balance is relevant to determining whether orchestral layers form and which are “in front,” or perceptually foregrounded (1954–1959, vol. 1, 223; vol. 3, 1): differences in extensity (auditory size) and intensity (force) lead to separation, and similarities lead to grouping. In his conception, the perceptual salience that conditions whether a layer is heard in the foreground is related to two other notions that are derived from extensity and intensity. The first is transparence (transparency), which corresponds to a timbre’s ability to allow other timbres played concurrently to be heard. The transparency of a sound is high if the extensity is large and the intensity is weak. Conversely, transparency is lower if the extensity is thin and the intensity is strong. So, a large, weak sound (like a clarinet in the low to medium register) is more transparent and would be relegated to the background, whereas a thin, intense sound (like the oboe) is more opaque and would tend to occupy the foreground (Chiasson and Traube 2007). Koechlin uses these notions to explore orchestration choices in melody and accompaniment writing (1954–1959, vol. 3, 30–206).

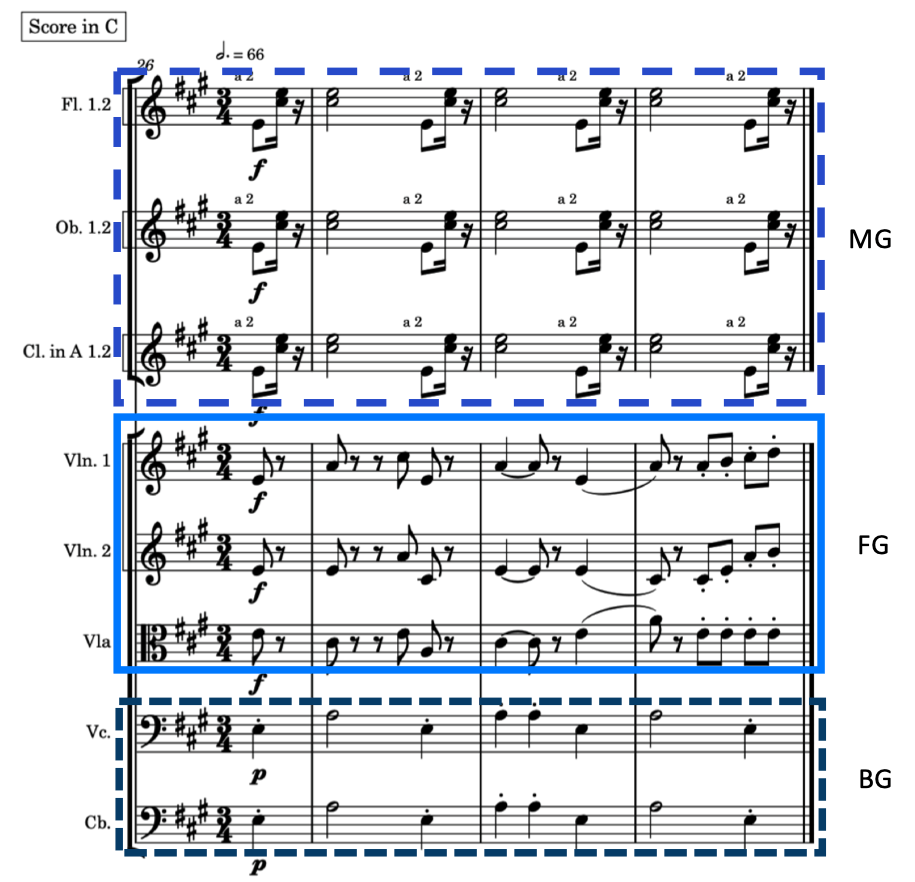

[5.20] Stratification (stable). A three-layer, stable stratification can be found in the second movement of Mahler’s Symphony no. 1 in D major (1884–88) (Example 20, Audio Example 16). The punchy rhythmic violins and violas playing at forte occupy the foreground (FG: solid bright blue box) with background celli and contrabasses providing a subtle mf rhythmic emphasis (BG: short-dashed dark blue-green box). The high woodwinds play a repeating pattern at forte in the middleground (MG: long-dashed blue box). One factor that separates the layers is register, with high woodwinds, mid-register strings, and low strings. What gives prominence to the upper strings is the crunch of forte staccato, drawing attention to the main melody. Although the woodwinds are in the highest register, they don't have the same power as the upper strings and also have a repeating pattern, which doesn't hold attention as well (Taher, Rusch, and McAdams 2016).

Example 20. Stratification (stable) with three layers: Mahler, Symphony no. 1, ii, mm. 26–31

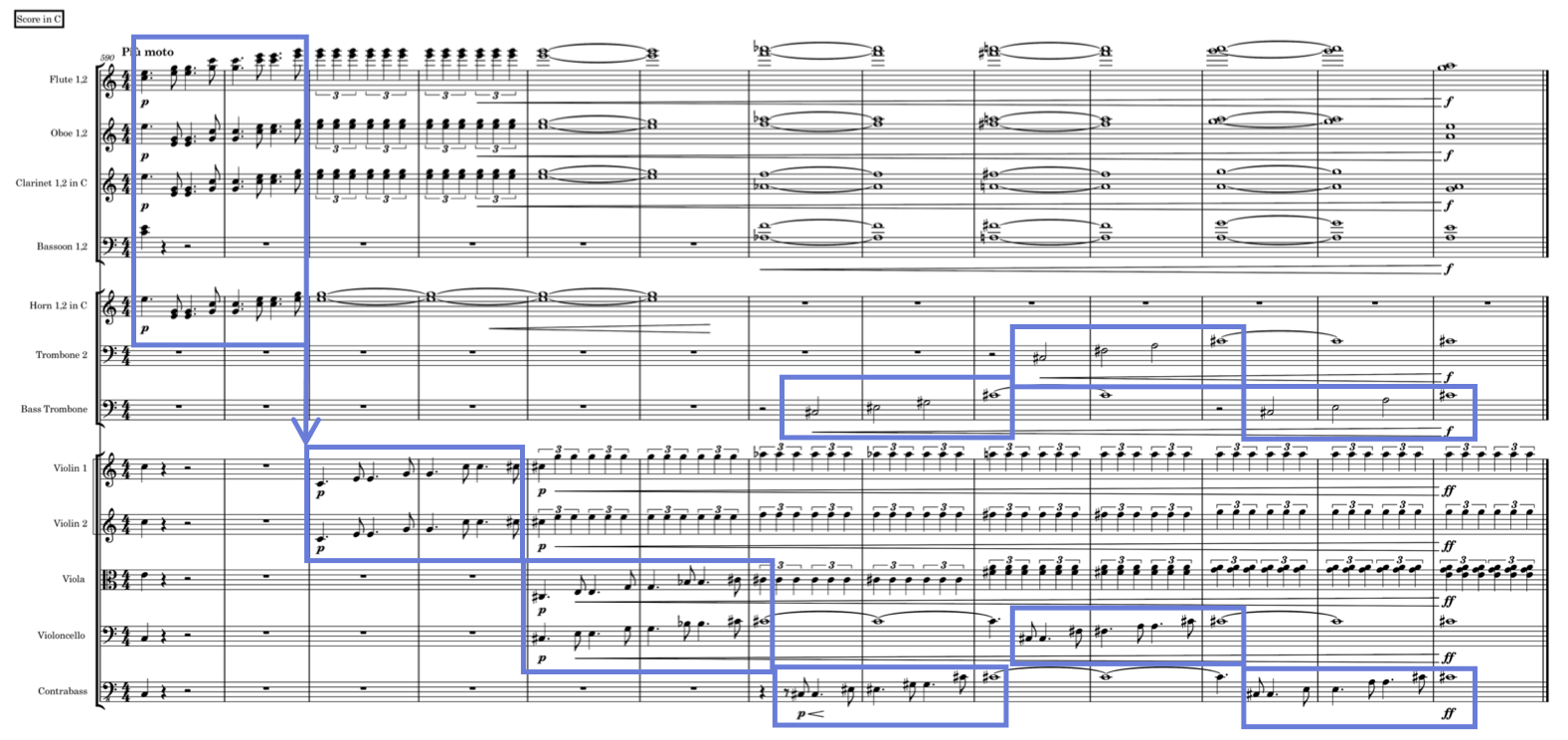

[5.21] Stratification (transforming). A passage early in Borodin’s In the Steppes of Central Asia (1880) has three layers (Example 21). The foreground contains sustained decrescendo notes moving through different timbres (solid bright blue box), the middleground has pizzicati alternating between celli on the beat and violas off the beat (long-dashed blue box), and the background is composed of a high blended cover tone on E6 and E7 in the violins, held throughout (short-dashed dark blue-green box) (Audio Example 17). The foreground timbral pattern moves from an octave blend on E2 and E3 in the horns to E5 in the oboe to a four-instrument blend on A4 and E5 with A clarinets doubled at the octave by the flutes, and then back to the oboe E5. This pattern is repeated three times, although the last one is elongated, and all instruments attack together after the third sounding of flutes and clarinets. It is the timbral change that makes this stratum perceptually salient. The transformation here is a timbral shift pattern (see the timbral contrasts section below) that occurs in the foreground. We have found other cases of transforming stratification in which instruments move between layers, such as coming to the fore from a background position and then receding again. In the present case, the addition or reduction or change of instrumentation, as well as the perceived change of coloration, occurs within one orchestral stratum.

Example 21. Stratification (transforming) in three layers: Borodin, In the Steppes of Central Asia, mm. 27–42

[5.22] It is important to emphasize the notion of grouping strength as it pertains to both concurrent and sequential grouping categories. Acoustic components are fused into events, and under appropriate conditions of onset synchrony, harmonicity, and parallel motion in pitch and dynamics, sounds produced by two or more instruments can be blended together. Blend is not an all-or-none phenomenon, and slight departures from the concurrent grouping criteria can weaken the strength of a blend (not to mention the effects of hall acoustics, microphone placement, or mixing choices on perceived blend strength). Similarly, for sequential grouping, auditory stream formation involves individual instruments or a fairly strong fusion or amalgam of events that then form the streams; it requires fairly strong sequential integration of events within streams and segregation of events into different streams. A looser grouping obtains in the case of surface texture integration, where it is clear that there are multiple sound sources, but it is difficult to track any given one due to registral, dynamic, or timbral similarities. Looser grouping is also a feature of stratification, in which a given layer of the orchestral strata may have a single stream, two or more streams of similar sounds, an integrated surface texture, or looser blends that form homophonic harmonic textures, to name just a few possibilities. So, in terms of concurrent and sequential organization, stratification represents the highest level of the grouping hierarchy. Reconsidering the example from Borodin's In the Steppes of Central Asia in Example 18 demonstrates this complexity quite clearly, with two blended streams in counterpoint in the foreground: flutes and violins in one stream, bassoons and horns 1 and 3 in the other. The sustained harmonic part of the background is realized by the other woodwinds and brass and is accompanied by a low-register rhythmic pattern in timpani and lower strings. Thus, each layer is a composite.

Segmental Grouping

Timbral Contrasts

[6.1] Segmentation occurs when discrete changes are involved in one or more musical parameters. In line with Kurby and Zacks’ (2008) model of event segmentation, events with similar properties are grouped together, and a sudden change in one or more of these properties, followed by a timespan whose events share similar properties among themselves, introduces a contrast that provokes segmentation. At a more local level, this notion has been theorized in terms of grouping preference rules by Fred Lerdahl and Ray Jackendoff (1983). Irène Deliège (1987) sorts their rules into two classes according to what provokes a segmentation: temporal and qualitative discontinuities. Temporal discontinuities include silent gaps and changes in articulation, duration, and tempo. Qualitative discontinuities include those in pitch, dynamics, and timbre; timbre was not explicitly included by Lerdahl and Jackendoff but was added by Deliège. Our focus here is on the role of orchestrated timbral and registral change in perceptual segmentation, which Deliège found to be among the stronger cues for segmentation in perceptual experiments. Deliège (1989) further explored sectional segmentation as a hierarchically superior grouping of groups, which contributes to the perception of larger-scale formal organization. In works by Pierre Boulez (Éclat for fifteen instruments, 1965) and Luciano Berio (Sequenza VI for solo viola, 1967), she noted the role of changes in surface texture, register, and instrumentation in the perception of larger-scale sections and showed that the confluence of several of these parameters increased the number of segmentations reported, thus indicating the strength of the sectional boundary. Bob Snyder (2000, 193) has noted that sectional boundaries are “points of multiparametric change.” Segmental grouping, which relies on contrast between successive spans of time to create segmentation or chunking, is thereby distinguished from concurrent and sequential grouping, which involve temporal overlap.
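The idea that a boundary grows stronger as more parameters change at once (Deliège’s confluence of cues, Snyder’s “points of multiparametric change”) can be illustrated with a toy computation. The following is a minimal sketch under loudly stated assumptions: it is not the authors’ model, nor Deliège’s or Kurby and Zacks’; each event is hypothetically reduced to categorical labels for a few parameters (the names "instrumentation", "register", and "dynamic" are illustrative), boundary strength is simply the count of parameters that change between adjacent events, and the segmentation threshold is arbitrary.

```python
from typing import Dict, List

# Hypothetical event representation: each event maps a parameter name
# (e.g. "instrumentation", "register", "dynamic") to a categorical label.
Event = Dict[str, str]


def boundary_strengths(events: List[Event]) -> List[int]:
    """For each gap between consecutive events, count how many parameters
    change across that gap (a crude proxy for boundary strength)."""
    strengths = []
    for prev, curr in zip(events, events[1:]):
        params = set(prev) | set(curr)
        strengths.append(sum(prev.get(p) != curr.get(p) for p in params))
    return strengths


def segment(events: List[Event], threshold: int = 2) -> List[List[Event]]:
    """Start a new segment wherever the number of co-occurring parameter
    changes reaches `threshold` (an assumed, arbitrary cutoff)."""
    if not events:
        return []
    segments = [[events[0]]]
    for strength, event in zip(boundary_strengths(events), events[1:]):
        if strength >= threshold:
            segments.append([event])  # multiparametric change: new segment
        else:
            segments[-1].append(event)  # similar properties: keep grouping
    return segments


events = [
    {"instrumentation": "strings", "register": "mid", "dynamic": "p"},
    {"instrumentation": "strings", "register": "mid", "dynamic": "p"},
    {"instrumentation": "tutti", "register": "wide", "dynamic": "f"},
    {"instrumentation": "tutti", "register": "wide", "dynamic": "f"},
    {"instrumentation": "woodwinds", "register": "wide", "dynamic": "f"},
]
print(boundary_strengths(events))  # [0, 3, 0, 1]
print([len(s) for s in segment(events)])  # [2, 3]
```

In this toy passage, the simultaneous change of instrumentation, register, and dynamic yields a strong boundary, whereas the later change of instrumentation alone does not reach the threshold. Real perceptual segmentation also weighs the size of each change and the cue type, which a simple count ignores.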

[6.2] Timbral contrasts play several roles in orchestration, as shown in Example 22. The taxonomic types are distinguished by exactness of repetition and by the presence or absence of harmonic change. We use the term timbral shift for a passage in which a musical pattern defined by melody and rhythm is passed in similar form from instrument to instrument, often with accompanying pitch and harmonic change. The repetition of exact musical material with an instrumentation change that simulates distance is termed timbral echo. Antiphonal[22] contrasts are achieved by alternating different instrumentations and orchestral registers in a call-and-response format, with accompanying harmonic material and at times with different musical material in the call and the response. Timbral juxtapositions cover local contrasts that do not fit into the other three categories: a change in orchestration is introduced to enhance a discursive or structural moment, and melodic, rhythmic, and harmonic changes may be introduced as well. Finally, large-scale changes in several other musical parameters along with orchestration can indicate sectional boundaries. More generally, in orchestration practice, sudden timbre change contributes to boundary creation, and timbre similarity contributes to the grouping of events into coherent units. Of interest to our study is the role timbre change plays at various levels of the perceptual grouping hierarchy.

Timbral Shifts

[6.3] Passages of this type can be conceived of as an orchestral “hot potato,” wherein a musical pattern is reiterated with varying orchestrations of similar prominence; that is, a repeated phrase is “passed around” the orchestra. Perceptually it is similar to the transforming blend discussed in the section on Blend and to the transforming stream integration discussed in the section on Integration, but here the change is presented in discrete timbral steps rather than as a continuously transforming event. Example 23, from the first movement of Franz Schubert’s Symphony no. 9 in C major (“The Great”) (1825–8), involves an ascending arpeggio pattern with a repeated dotted-quarter/eighth-note rhythm. Beginning high with full C major triads in flutes, oboes, clarinets, and horns, the pattern dives to unison first and second violins in C major and then descends further to violas and celli, which climb a C diminished seventh chord. It continues with a C major arpeggio in blended bass trombone and contrabass, an F minor arpeggio in blended tenor trombone and celli, and finally an A dominant seventh chord arpeggiated by blended bass trombone and contrabass, with the seventh in the winds and upper strings. This combined timbral and harmonic progression provides a dramatic swell that is enhanced by a crescendo beginning with the entry of the violas and celli (Audio Example 18).

Example 23. Timbral shifts: Schubert, Symphony no. 9 in C major, i, mm. 594–602

Timbral Echoes

[6.4] Timbral echoing involves a musical phrase or idea that is repeated with a different orchestration. In this category the same musical idea is played again, either in full or as a fragment, the second statement echoing the first in a different instrumentation. The echoing group is often scored to sound more distant than the original, and composers also use this effect in reverse (distant, then close) as a sort of inverse timbral echo. Echoes played by offstage or spatially distant instruments are an obvious case, for example, the offstage oboe in the third movement of Berlioz’s Symphonie fantastique (1830). However, at times the timbral variation itself can signal a change in distance. Rimsky-Korsakov mentions phrases in which an echo imitates the original with both a decrease in level and an effect of distance, and he stresses that the original and echoing instrument or combination should possess some sort of “affinity” ([1912] 1964, 110). He cites well-suited pairings such as muted trumpet echoing material in the oboes, or flutes echoing clarinets and oboes.[23] In Example 24, from the second movement of Jean Sibelius’ Symphony no. 2 (1902), the flute (darker purple box) echoes the trumpet (lighter pink box) in a melodic passage, twice in succession (Audio Example 19). Note the change in auditory size, intensity, and timbral brightness between the two instruments.

Example 24. Timbral echo: Sibelius, Symphony no. 2 in D major, ii, mm. 120–128

Antiphonal Contrasts

[6.5] This class of timbral contrasts obtains when musical materials call for an alternating call-and-response pattern. The alternation is often composed with contrasting harmony, such as a tonic-dominant relation between call and response, as well as a change of instrumentation and register. Examples of this type abound in the Classical era, often with stark contrasts in instrumentation corresponding to strict phrase-structural groupings. Example 25 shows a case from the second movement of Haydn’s Symphony no. 100 (“Military”) (1793) in which the call and response are elided. The pattern is first played by violas and second oboe in parallel thirds, with the flute doubling the oboe at the octave (darker pink box). It is then picked up by first and second violins coupled in thirds (lighter turquoise boxes). The pattern is played twice in succession. The harmony is provided by clarinets, bassoons, celli, and contrabasses, and the timbral alternation underscores a tonic-dominant-tonic-dominant progression in E-flat major (Audio Example 20).

Example 25. Antiphonal contrast: Haydn, Symphony no. 100 in G major (“Military”), ii, mm. 61–64

Timbral Juxtapositions

[6.6] Composers use other timbral contrasts that do not fall into the previous three categories. Timbral juxtapositions occur when sonorities with different instrumentations, registers, and musical textures are set against one another in close succession. The materials are musical patterns of similar perceptual prominence. A striking example of a back-and-forth timbral juxtaposition between two contrasting instrument groups is found in the second movement of Jean Sibelius’ Symphony no. 2 (1902) (Example 26). The first group (darker pink boxes) is composed of bassoons, horns, and treble strings. The second group (lighter turquoise boxes) contrasts with the first not only in melodic and rhythmic character but also in register and instrumentation. Over three alternations between the two groups, the second group evolves from celli, through blended combinations of bassoons, celli, contrabasses, and timpani, to celli and contrabasses, this last combination eliding with the first group. The rapid alternation of instrumental combinations adds to the frenetic texture of the passage in rehearsal section C (Audio Example 21).

Example 26. Timbral juxtapositions: Sibelius, Symphony no. 2, ii, mm. 67–75

Sectional Boundaries

[6.7] Deliège (1989) has proposed that large-scale sections in music are formed on the basis of similarities in register, texture, and instrumentation (i.e., timbre), and that changes in one or more of these parameters, along with more formal considerations, create boundaries between sections. Consider an example of sectional contrast from the work of Haydn. Emily Dolan (2013a, chap. 5) explores in great detail how Haydn uses changes in instrumentation both in such structural roles and for dramatic impact, to create what she characterizes as “incessant variety and orchestral growth” (97–99). Dolan cites several recent authors who stress the important role played by orchestration in articulating form, particularly in Haydn’s London symphonies (nos. 93–104). In many cases, Haydn sets up strong sectional contrasts between smaller and larger instrumental forces. As she notes, “many of Haydn’s slow movements unfold as a working-through of opposing sonorities or textures: a slow movement will begin with tranquility and repose, and as it unfolds, a more forceful sonority—often massed orchestral sound, sometimes triumphant, other times turbulent—upsets the serenity” (2013a, 120).

[6.8] Dolan (2013b) has developed orchestral graphs to visualize how instrumentation evolves over time, including large-scale sectional effects. Example 27 shows one of her graphs for the second movement of Haydn’s Symphony no. 100 (“Military”) (1793). Note the clear sectional organization related to orchestration that emerges from this representation, particularly the alternations between strings and woodwinds, with occasional punctuations of higher-dynamic tutti. Audio Example 22 plays mm. 49–70, indicated in Example 27. This passage moves from piano woodwinds with a horn pedal in C major, to a forte tutti in C minor, to piano strings and woodwinds in E-flat major with the call-and-response pattern between tonic (flute, oboe, and viola) and dominant (violins) discussed in Example 25, stopping on the first chord of the next tutti at m. 70. Orchestrational contrasts such as timbral shifts, timbral echoes, antiphonal contrasts, and timbral juxtapositions can thus operate over local temporal spans, while similar contrasts can also operate at higher levels of structure, as in sectional boundaries.

Example 27. Sectional boundaries: Dolan’s (2013b) orchestral graph of Haydn, Symphony no. 100, ii

Conclusion

[7.1] It is important to emphasize the notion of hierarchical organization in the taxonomy of orchestration effects related to perceptual grouping processes (e.g., blended instruments can form a stream that exists within a foreground layer, which in turn is situated within a large section defined by a sectional boundary). The taxonomy is organized from the smallest units (event formation through perceptual fusion or blending), through the connection of events into streams, textures, and layers, and finally to segmentation through contrasts. This area of study has benefited from combining music analysis with perceptual theorizing, which provides a foundation for a model of what orchestration treatises have primarily addressed through examples. Although we have focused on Western orchestral music excerpts from 1793 to 2001, the TOGE, being based on fundamental perceptual principles, is perhaps applicable to all music, regardless of style or culture.