Dance and Timbral Exploration (DaTE)

Interactive Project Report

Published: December 11, 2023

Authors

Athena Loredo, Bob Pritchard [PI], and Keith Hamel (University of British Columbia)

Summary

Aims & Goals

The DaTE project investigated the use of dance tracking to control electroacoustic processing of acoustic timbres. To this end, the project involved a composer/programmer, a dancer, a flautist, and a cellist, and used the UBC-developed Kinect Controlled Artistic Sensing System (KiCASS) to track the dancer and generate movement/position data. The dance tracking and processing of audio was carried out in real time in performance, with diverse types of processing and control being used in different sections of the composed work.

Flute and cello were selected as the acoustic sources because together they offer a broad range of pitches and a variety of extended techniques. Additionally, these instruments were easy to mic for audio processing. The instrumentalists had previous experience with contemporary music involving extended techniques and non-traditional notation and structures, while the dancer had experience with the KiCASS system, with dance improvisation, and with collaborating in the development of ensemble works involving contemporary music.

Results

DaTE created and presented an interactive work with a complex, variable structure in which timbre processing was controlled by a dancer’s movements and location. In the piece – Working In New Directions (WIND) – the compositional materials and their processing were controlled through the work’s four main sections, but the specific processing and timing was dependent upon the interaction of the dancer, flautist, and cellist as they made decisions based on what each was doing during performance. The outcome was a work in which acoustic processing varied from subtle to complex and was carried out in conjunction with real time decision making by the performers. The work was presented and recorded at the UBC Bang!Festival in 2023.

Resources

Kinect Controlled Artistic Sensing System (KiCASS)

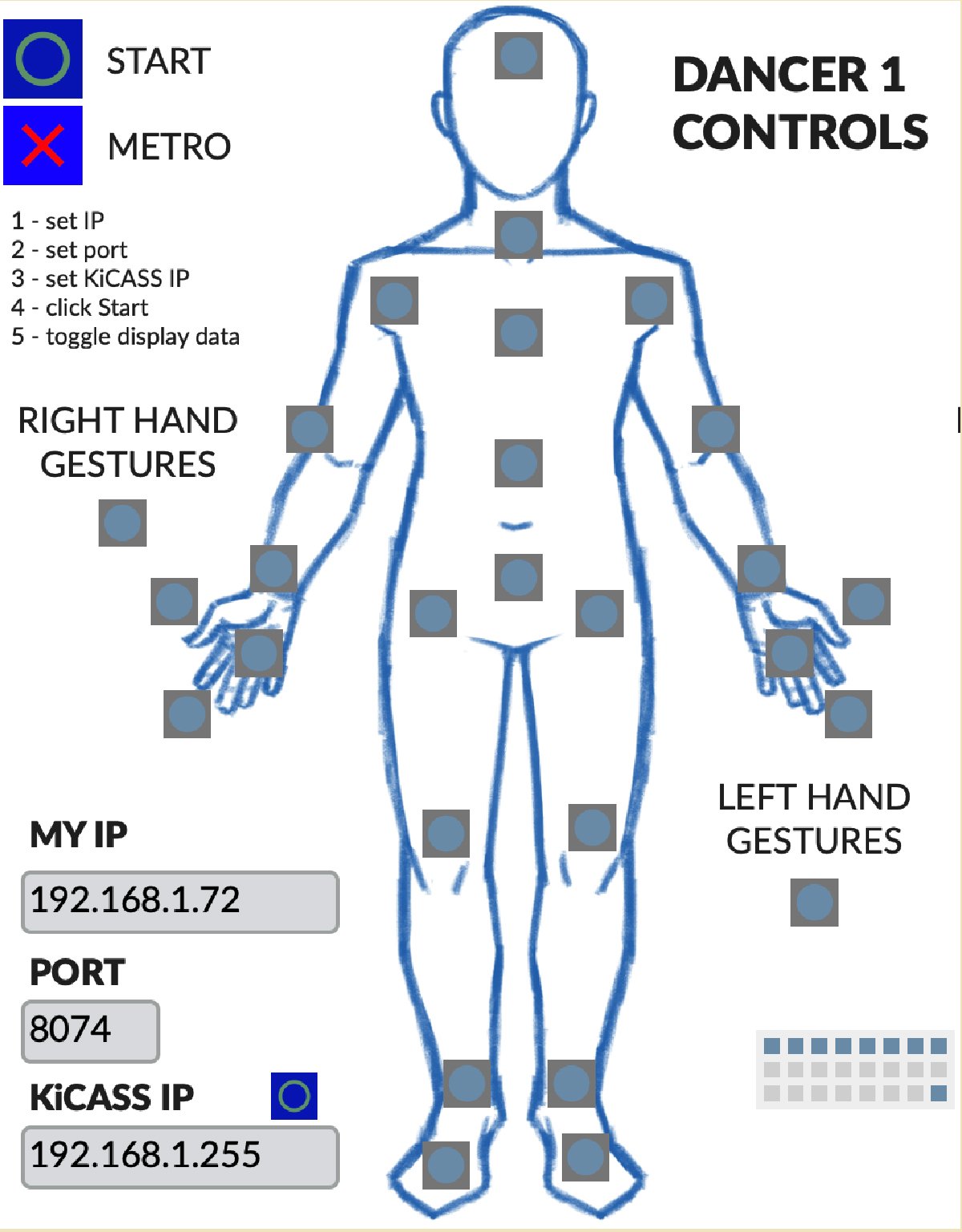

The UBC-developed KiCASS system uses the Kinect-for-Windows v.2 camera to track 18 target points in three dimensions on up to six performers. The 18 target points are shown in Example 1 as blue buttons over a body diagram. Running on a Windows computer, the system broadcasts data to client computers that have selected desired target points for tracking. In the bottom left corner of Example 1, the user sets the IP addresses for the KiCASS and client computers, as well as the local port. (See Example 2 for a diagram of the typical KiCASS setup.) The tracking area forms a quadrilateral, beginning about one metre in front of the KiCASS camera and extending out about six metres. The width of the tracking area is about two metres close to the camera and about six metres at its furthest point. The system is robust and has been used in dozens of performances by students and faculty at the UBC School of Music, as well as in live and networked performances in Europe, the US, South Africa, and the Philippines.
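The tracking-area geometry described above can be sketched as a simple containment test. This is a minimal sketch and not part of KiCASS: it assumes the camera sits at x = 0 looking along the depth axis, that the area is a symmetric trapezoid with straight sides, and that the far edge lies six metres from the camera (the description could also be read as six metres beyond the near edge). The function names and default values are hypothetical and use only the approximate figures quoted above.

```python
def tracking_half_width(depth_m, near_depth=1.0, far_depth=6.0,
                        near_width=2.0, far_width=6.0):
    """Half-width (metres) of the assumed trapezoidal area at a given depth.

    The width is linearly interpolated between the near edge (about 2 m wide)
    and the far edge (about 6 m wide). All defaults are approximations.
    """
    t = (depth_m - near_depth) / (far_depth - near_depth)
    width = near_width + t * (far_width - near_width)
    return width / 2.0


def in_tracking_area(x_m, depth_m, near_depth=1.0, far_depth=6.0,
                     near_width=2.0, far_width=6.0):
    """Return True if a floor position lies inside the assumed tracking area.

    x_m is the sideways offset from the camera's centre line;
    depth_m is the distance straight out from the camera.
    """
    if not (near_depth <= depth_m <= far_depth):
        return False
    half = tracking_half_width(depth_m, near_depth, far_depth,
                               near_width, far_width)
    return abs(x_m) <= half


if __name__ == "__main__":
    for point in [(0.0, 3.0), (1.5, 1.0), (2.9, 6.0), (0.0, 0.5)]:
        print(point, in_tracking_area(*point))
```

A check like this could be used when planning choreography to flag stage positions that fall outside the trackable region before rehearsal.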

Max/MSP/Jitter

M/M/J was chosen as the software for the DaTE project since the composer/programmer had previous experience with it, it was capable of supporting the desired plugins and patchers, and it has proven to be an excellent choice for working with KiCASS data.

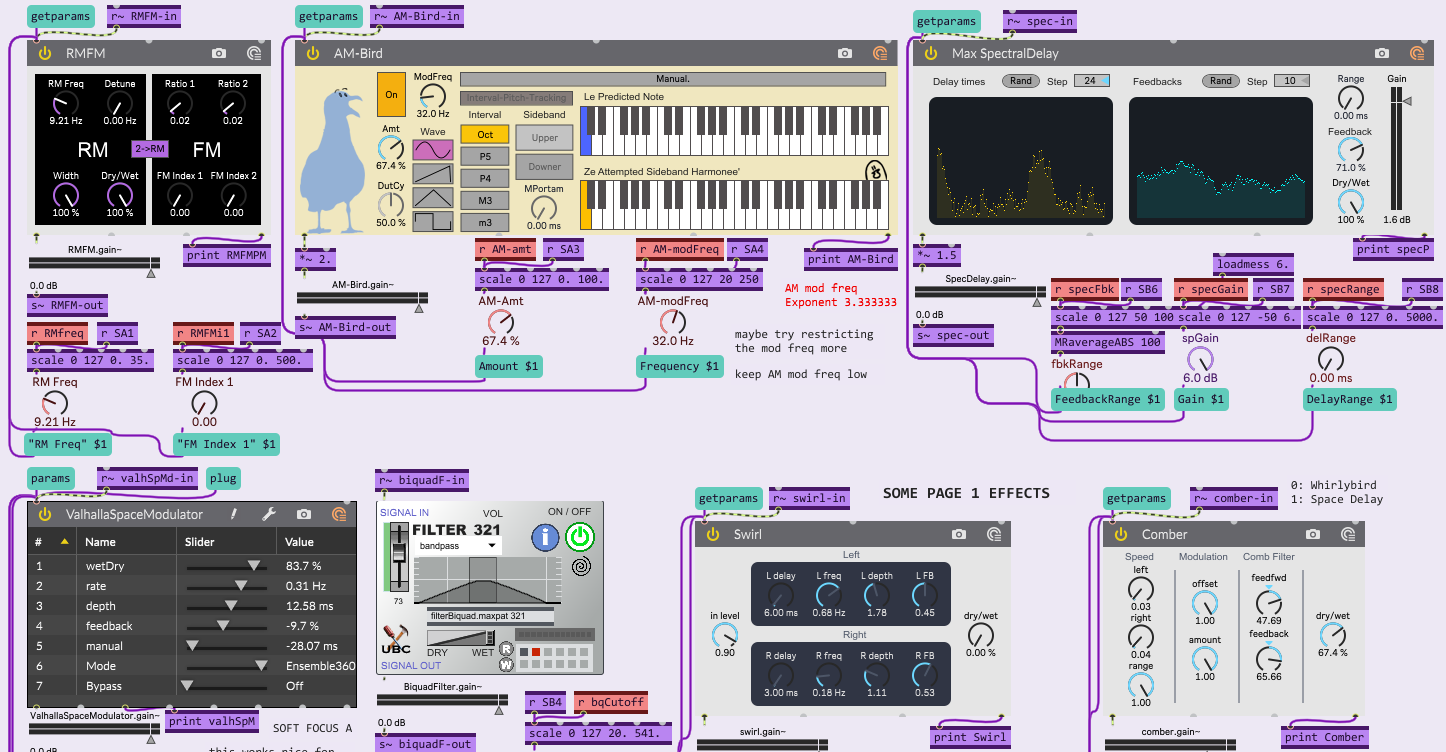

Plugins/Patchers

Several VST/AU plugins were investigated with an ear towards selecting those which would provide interesting and sophisticated processing of the instruments’ timbres and extended techniques. (See Appendix A for a list of these.) The plugins were chosen based on how well they processed both long, sustained gestures and shorter noise-based gestures (like tongue rams and snap pizzicati). Delay-based plugins that combined different modulation types (e.g., amplitude, ring, frequency) or signal processing/synthesis (e.g., flanging, granular) were the most effective because the delay prolonged the short gesture and its processing. Alongside the traditional VSTs, most of the audio effects used were Max for Live devices. These devices are built in Max/MSP and work like regular plugins in Ableton but can also be imported into Max/MSP/Jitter and used without Ableton. Because of the collaborative nature of the Max/MSP/Jitter community, many high-quality free Max for Live devices are available online, including some made by Cycling ’74 (the developer and distributor of Max/MSP/Jitter), such as Swirl and Comber, the M4L devices in the bottom right of Example 3. Using Max for Live devices was especially useful for a Windows user looking for spectral processing since many prominent spectral plugins are Audio Units that only work on macOS.

Facilities

Much of the programming, development, testing, practicing, and rehearsing took place in the UBC Institute for Computing, Information, and Cognitive Systems Sound Studio. This bespoke studio supports multichannel diffusion, enabling rehearsals and the performance to include eight-channel diffusion.

Research and Development

Control/testing of processes

The composer/programmer had previous experience using the KiCASS system interactively with Max/MSP/Jitter and Unity. Student work with KiCASS typically results in basic changes to timbre, but since DaTE explored more subtle and complex modifications, the initial research for the processing of acoustic timbres focused on plugins and Max/MSP patches that provided sophisticated transformations of the flute and cello sounds. This research involved investigating various plugins and Max patchers, composing interesting instrumental gestures, recording those, and then testing their modification with various processes, which could be controlled using KiCASS data from the dancer. The results of this work often led back to the compositional process and to changing passages to make them more effective. Example 4 shows a common method of creating new works with interactive systems, with two parallel cycles of work being carried out for programming and composing. Using this approach, delay-based plugins and patchers were selected for use in timbre modification, controlled using KiCASS data generated by the dancer (see list of plugins/patchers in Appendix A).

Before working with the musicians and dancer, the plugins were tested in Ableton Live using samples of extended flute and cello techniques. This testing is part of the testing and system refinement step represented in the leftmost cycle in Example 4. In Ableton Live’s Session View, while the samples were looping, plugin parameters were changed manually to simulate how a dancer’s movement data could influence these values. The parameters chosen to be mapped onto the dancer were categorized into two groups: those that significantly altered timbre and those that produced minor changes.

In addition to changes in the dry-wet mix of an audio effect, clearly audible parameter changes included the amount of amplitude modulation and the modulation frequencies for the ring/frequency modulation plugin. Minor parameter changes included the feedback values of the comb filter, the flanger, and the delay-time modulation. Because these effects rely heavily on delay-based processes, large changes in parameter values created either vastly different effects (especially with the granular synthesis effect, where even minor changes had significant results) or instabilities, where reducing a parameter value did not alter the sound because of the prolonged delay. Having major and minor parameter changes already in mind facilitated the rehearsal process since it reduced the time needed for experimentation. Not all parameter changes that worked in Ableton translated well to the piece, so either the composition was modified to accommodate the processing, or the processing was reduced or removed. This part of the process corresponds with the testing and rehearsing step in the rightmost cycle in Example 4.

Gesture Mapping from Dance

A second aspect of the timbral processing involved working with the dancer to test which gestures could be easily and artistically used in performance to produce the required data for the control of processing. The dancer’s head, hand, and foot positions were tracked, allowing her to control timbre processing through her on-stage location (head data) as well as through hand and foot gestures. Since the dancer could easily and consciously control larger movements like stage position, the significant parameter changes were mapped to this position data. The parameter values changed based on the dancer’s proximity to one of four nodes, and as the piece progresses through the sections, effects are added to the existing nodes, building a thicker texture (see Appendix B for a list of the abbreviations used on the nodes diagram). The four node regions are the circles in Example 5. Nodes 1 and 4 are larger since the areas covered by the orange triangles are camera blind spots and cannot be tracked. The minor parameter changes were mapped to the dancer’s hands and feet. These parameter changes depended on how far the hands and feet were from the centre of the dancer’s core. This movement tracking led to the dancer/choreographer adding more gestures that involved reaching or extending but did not restrict the dancer’s ability to choreograph intricate movements. These gestures can be likened to vibrato on an acoustic instrument: they subtly alter timbre in a way that is a background or second-nature consideration for a performer.
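The limb-based mapping described above, in which a minor parameter follows the distance of a hand or foot from the dancer’s core, can be illustrated with a short sketch. This is not the project’s Max patch: the function names, the `max_reach_m` calibration value, and the 0.0 to 0.9 feedback range are hypothetical, and the coordinates are assumed to be three-dimensional positions in metres.

```python
import math


def limb_extension(core, limb, max_reach_m=1.0):
    """Normalised extension of a limb: 0.0 at the core, 1.0 at full reach.

    core and limb are (x, y, z) positions in metres. max_reach_m is a
    hypothetical per-dancer calibration value; distances beyond it are
    clamped to 1.0.
    """
    distance = math.dist(core, limb)
    return min(distance / max_reach_m, 1.0)


def scale(value, low, high):
    """Map a normalised value in [0, 1] onto a parameter range [low, high]."""
    return low + value * (high - low)


if __name__ == "__main__":
    core = (0.0, 1.0, 3.0)        # assumed torso position
    right_hand = (0.6, 1.4, 3.0)  # assumed hand position
    extension = limb_extension(core, right_hand, max_reach_m=0.9)
    # Hypothetical target: a feedback amount limited to a safe range.
    feedback = scale(extension, 0.0, 0.9)
    print(round(extension, 3), round(feedback, 3))
```

Clamping the normalised value keeps a parameter such as feedback inside a safe range even when a tracked point jumps unexpectedly.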

Musical Structure

The composition has four distinct sections, but within those sections the presentation of the materials is variable. Rather than using linear and predetermined sections, it was decided to adopt a structural approach like moment or mobile forms, as used by Stockhausen, Brown, and Cage. However, given the intricacies of working with a dancer and live electroacoustic processing, more structure was required than an open form could provide. As a result, the score resembles a “choose-your-own-adventure” text, where some directionality is implied, but the moment-to-moment decisions depend solely on the performer. In Example 6, the layout of musical material mirrors the node regions in Example 5, so the performers were asked to select their material based on the dancer’s position relative to the Kinect camera. This combination of notated instructions with performer choice resembles Sandeep Bhagwati’s technique of comprovisation, and Bhagwati himself uses comprovisation in his research-creation work involving movement and technology, NEXUS.

Example 6: The flute (above) and cello (below) parts for WIND page 3 of 4. Annotations made during the rehearsal process indicate the relationship between the score and the audio processing. For page 3 specifically, the blue and yellow shaded areas represent the major and minor harmonizer effects, respectively.

Choice of instrumentation

Regarding instrumentation, the flute and cello were selected based on a few criteria. As an aerophone and chordophone, the flute and cello have contrasting tones, timbres, and means of sound production. Both also have plenty of similar and unique sounding extended techniques (like pizzicato on the cello versus tongue rams on the flute or air sounds on the flute versus non-pitched arco sounds on the cello). Lastly, using both a soprano- and bass-range instrument increased the combined registral possibilities of the ensemble. From an early stage, extended techniques were integrated into the compositional plan. Specifically, short sounds that had noise were preferred for the first two sections. The selection of VSTs was based on these sounds since most effects would work with ordinary playing techniques.

Compositional process

One part of the composition process involved composing the acoustic score using a notation program (Finale) and a vector-based design application (Vectornator). This score had to be completed early to allow performers enough time to prepare. While the score was coming together, the electroacoustic portion was being worked out in Ableton Live. Since the piece uses extended techniques and is not organized in linear chronological order, typical music playback options were unavailable. Different plugins were auditioned and chosen based on how well they worked with samples of extended techniques. While the first two sections of the piece are heavily based on extended techniques, the third and fourth sections feature drones and ordinary playing, so the chosen plugins had to be effective with both types of material. In Example 7, which is a screenshot of the Ableton Live Session View of the piece, extended technique samples (tracks 1 and 2) and sustained tones (tracks 3 to 5) are routed through sends A through H, which contained the plugins that were being tested.

Development

Early in the rehearsal process, it was decided that the performers would record a few versions of the piece with no electroacoustic processing so that the dancer could know what the piece sounded like and the composer could test the audio effects on passages of the piece. This approach was practical in that the dancer and performers entered group rehearsals on equal footing; neither party knew what the electroacoustic processing would consist of, and it allowed the performers to rehearse the sections and put together the piece without the extra consideration of the electronics. Once the choreography was established, challenges arose with mapping the movement tracking to the [nodes] object in Max/MSP/Jitter. The [nodes] object is highly effective at interpolating data, but it has many presets and settings that work better for some data types than others. Depending on the data set and the arrangement of the nodes in the space, there can be dead spots where the value is zero for all nodes. Because audio processing needs continuous data, dead spots resulted in areas with no processing, which was beneficial for some parts of the piece. However, as the piece builds, gaps in the sounds become more noticeable. The solution was to use larger nodes that shared more intersecting areas and to interpret the interpolated data outside the [nodes] object to account for how different values mapped to different parameters.
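The dead-spot problem and the larger-node solution described above can be illustrated with a simplified model. This sketch does not reproduce the internal algorithm of the Max [nodes] object: it assumes circular nodes whose weight falls linearly from 1.0 at the centre to 0.0 at the edge, and the node layout, radii, and function names are all hypothetical.

```python
import math


def node_weights(pos, nodes):
    """Weight of each circular node at a 2D position.

    Each node is (centre_x, centre_y, radius). The weight is 1.0 at the
    node's centre and falls linearly to 0.0 at its edge (an assumed model).
    """
    weights = []
    for cx, cy, radius in nodes:
        distance = math.hypot(pos[0] - cx, pos[1] - cy)
        weights.append(max(0.0, 1.0 - distance / radius))
    return weights


def is_dead_spot(pos, nodes):
    """True when every node's weight is zero, so no processing is driven."""
    return all(w == 0.0 for w in node_weights(pos, nodes))


if __name__ == "__main__":
    centres = [(-1.0, 2.0), (1.0, 2.0), (-1.0, 4.0), (1.0, 4.0)]
    small_nodes = [(x, y, 0.8) for x, y in centres]  # non-overlapping
    large_nodes = [(x, y, 1.6) for x, y in centres]  # overlapping
    stage_centre = (0.0, 3.0)
    print(is_dead_spot(stage_centre, small_nodes))  # gap between nodes
    print(is_dead_spot(stage_centre, large_nodes))  # covered by overlap
    print([round(w, 3) for w in node_weights(stage_centre, large_nodes)])
```

In this model, enlarging the radii so that the circles overlap removes the gap at the centre of the stage, at the cost of several nodes being active at once. This is why the resulting weights may need further scaling before they are sent to individual plugin parameters.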

Phase 4

In WIND, the first two sections establish that stage left has audible granular and spectral processing, while stage right sounds less processed in comparison (in the first section, stage right has no processing) (see 1:45-2:05 of performance). The dancer’s control of timbral processing gradually increases as the piece progresses. In the third section, in addition to adding the major and minor harmonies on the stage right nodes, the dancer also controls more extensive parameter changes in the ring/frequency and amplitude modulation plugins (see 3:40-4:10 of performance). The third page of the score also instructs players to play fragments based on the dancer’s position. Associating stage left with the short, partially pitched gestures heard in the first half contrasts with the processing associated with the stage right nodes. The last section of the piece has long, partially pitched gestures, like air sounds and helicopter bowing (arco diagonale), combined with the same electroacoustic processing as the third section (without the harmonizer). However, since the delay-based effects are less aurally distinct with sustained gestures, the dancer’s gestures are slower and smoother to match the subdued ending (see 5:12-5:45 of performance).

Outcomes

Regarding the dancer’s movements, the body position relative to the Kinect camera was highly effective in the piece. Not only was there a visual correlation between the position and processing, but having the peak processing associated with places on the stage was effective in rehearsal and performance. Limb tracking was used for less significant plugin parameters, so hand movement and positioning as a means of exploring timbral processing with smaller gestures remains an area for future work. Although various delay-based plugins were used in the piece, the spectral and granular effects were the most effective on both the sustained and partially pitched gestures. Specifically, the timbres that these effects produced with the shorter, partially pitched gestures were more diverse than those produced with the amplitude, frequency, and ring modulation plugins. Future developments can be made by homing in on the types of spectral and granular effects that work best for specific extended techniques.

Video of Premiere Performance

Appendix

Appendix A: List of plugins and processing patches

DENPA Harmonizer by DENPA: www.denpastudio.com

Granular-to-go by Pluggo for Live, Cycling ’74

Comber by Pluggo for Live, Cycling ’74

Swirl by Pluggo for Live, Cycling ’74

SpectralDelay by Cycling ’74: https://docs.cycling74.com/max8/vignettes/spectral-processing_topic

Fm Ringmod 1.0 by Render: https://maxforlive.com/library/device/4532/fm-ringmod

AM Bird 1.0 by PraetorianCheese: snowyplovermusic.com

Valhalla Space Modulator by Valhalla DSP: valhalladsp.com

Filter Biquad by UBC Toolbox: https://www.opusonemusic.net/muset/toolbox.html

Appendix B: List of abbreviations used on the nodes object for the controllable parameters in the WIND Max/MSP/Jitter project

major – major harmonies via harmonizer

grmDel – dry/wet control for granular synth plugin

spcRng – delay and feedback control for FFT-based delay line plugin

swrlDW – dry/wet control for delay-time mod

RFi1 – frequency modulation index/depth

cmbDW – dry/wet control for comb filter

AMamt – amount of amplitude mod (in %)

valDW – dry/wet control for flanging plugin (Valhalla Space Modulator)

RMfreq – ring mod frequency (in Hz)

minor – minor harmonies via harmonizer

bqCtff – cutoff freq. for bandpass filter

References

Bhagwati, Sandeep. 2010. NEXUS for five wirelessly connected wind musicians wandering through a cityscape. https://matralab.hexagram.ca/projects/nexus/

Cage, John. 1951. Music of Changes for solo piano.

Crumb, George. 1971. Vox Balaenae for electric flute, electric cello, and amplified piano.

Stockhausen, Karlheinz. 1958-60. Kontakte for electronic sounds, piano, and percussion.